| | Chrome | Firefox |
|---|---|---|
| Windows | + | + |
| Mac OS | + | + |

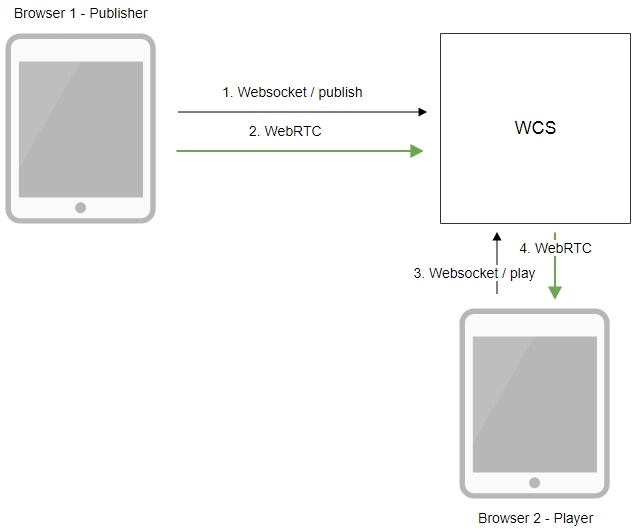

1. The browser establishes a connection to the server via the WebSocket protocol and sends the publish command.

2. The browser captures the screen and sends a WebRTC stream to the server.

3. The second browser also establishes a connection via WebSocket and sends the play command.

4. The second browser receives the WebRTC stream and plays the stream on the page.

1. For this test we use the demo server at demo.flashphoner.com and the Screen Sharing web application in the Chrome browser:

https://demo.flashphoner.com/client2/examples/demo/streaming/screen-sharing/screen-sharing.html

2. Click the "Start" button. The browser asks for permission to access the screen, then screen capturing starts and the stream is published:

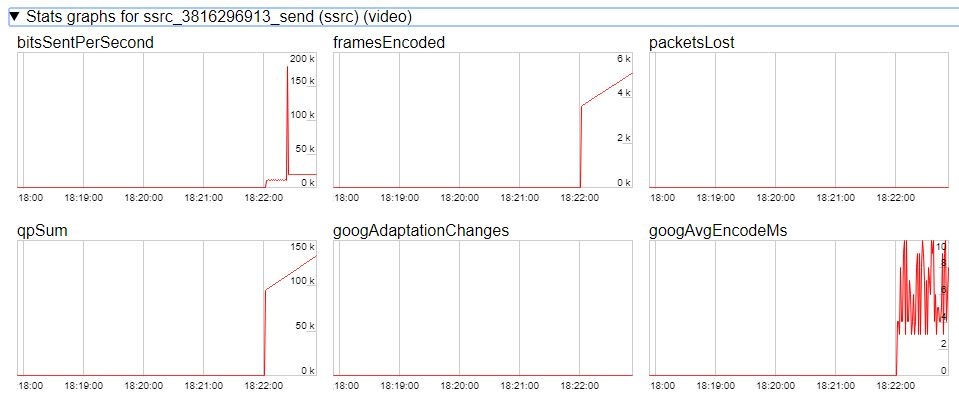

3. In chrome://webrtc-internals, make sure the stream is being sent to the server and the system operates normally.

4. Open Two Way Streaming in a new window, click Connect and specify the stream id, then click Play.

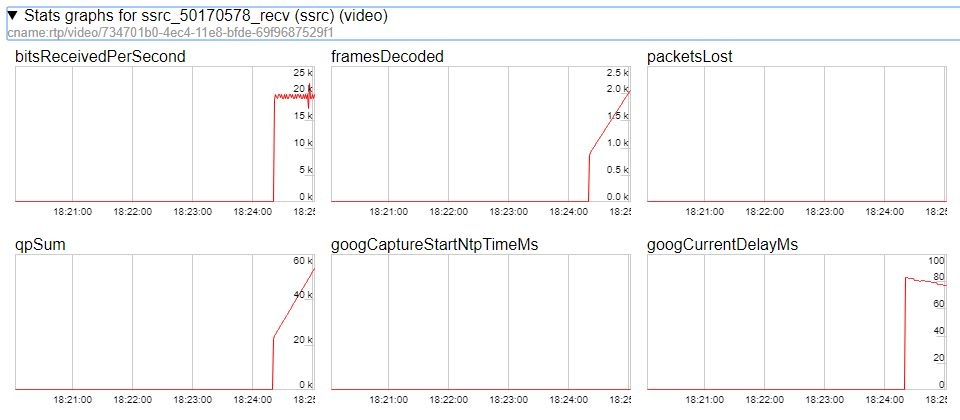

5. Playback graphs in chrome://webrtc-internals:

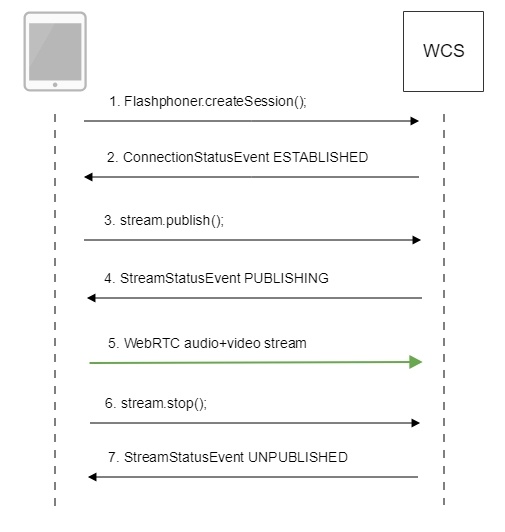

Below is the call flow when using the Screen Sharing example.

1. Checking whether the extension needs to be installed.

Browser.isFirefox(), Browser.isChrome(); code

    if (Browser.isFirefox()) {
        $("#installExtensionButton").show();
        ...
    } else if (Browser.isChrome()) {
        $('#mediaSourceForm').hide();
        interval = setInterval(function() {
            chrome.runtime.sendMessage(extensionId, {type: "isInstalled"}, function (response) {
                if (response) {
                    $("#extension").hide();
                    clearInterval(interval);
                    onExtensionAvailable();
                } else {
                    (inIframe()) ? $("#installFromMarket").show() : $("#installExtensionButton").show();
                }
            });
        }, 500);
    } else {
        $("#notify").modal('show');
        return false;
    }

2. Establishing a connection to the server.

Flashphoner.createSession(); code

    Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
        //session connected, start streaming
        startStreaming(session);
    }).on(SESSION_STATUS.DISCONNECTED, function(){
        setStatus(SESSION_STATUS.DISCONNECTED);
        onStopped();
    }).on(SESSION_STATUS.FAILED, function(){
        setStatus(SESSION_STATUS.FAILED);
        onStopped();
    });

3. Receiving from the server an event confirming successful connection.

ConnectionStatusEvent ESTABLISHED code

    Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
        //session connected, start streaming
        startStreaming(session);
        ...
    });

4. Publishing the stream.

stream.publish(); code

    session.createStream({
        name: streamName,
        display: localVideo,
        constraints: constraints
    }).on(STREAM_STATUS.PUBLISHING, function(publishStream){
        ...
    }).on(STREAM_STATUS.UNPUBLISHED, function(){
        ...
    }).on(STREAM_STATUS.FAILED, function(stream){
        ...
    }).publish();

5. Receiving from the server an event confirming successful publishing.

StreamStatusEvent, status PUBLISHING code

    session.createStream({
        name: streamName,
        display: localVideo,
        constraints: constraints
    }).on(STREAM_STATUS.PUBLISHING, function(publishStream){
        ...
        setStatus(STREAM_STATUS.PUBLISHING);
        //play preview
        session.createStream({
            name: streamName,
            display: remoteVideo
        }).on(STREAM_STATUS.PLAYING, function(previewStream){
            document.getElementById(previewStream.id()).addEventListener('resize', function(event){
                resizeVideo(event.target);
            });
            //enable stop button
            onStarted(publishStream, previewStream);
        }).on(STREAM_STATUS.STOPPED, function(){
            publishStream.stop();
        }).on(STREAM_STATUS.FAILED, function(stream){
            //preview failed, stop publishStream
            if (publishStream.status() == STREAM_STATUS.PUBLISHING) {
                setStatus(STREAM_STATUS.FAILED, stream);
                publishStream.stop();
            }
        }).play();
    }).on(STREAM_STATUS.UNPUBLISHED, function(){
        ...
    }).on(STREAM_STATUS.FAILED, function(stream){
        ...
    }).publish();

6. Sending the audio-video stream via WebRTC

7. Stopping publishing the stream.

stream.stop(); code

    session.createStream({
        name: streamName,
        display: localVideo,
        constraints: constraints
    }).on(STREAM_STATUS.PUBLISHING, function(publishStream){
        /*
         * User can stop sharing screen capture using Chrome "stop" button.
         * Catch onended video track event and stop publishing.
         */
        document.getElementById(publishStream.id()).srcObject.getVideoTracks()[0].onended = function (e) {
            publishStream.stop();
        };
        ...
        setStatus(STREAM_STATUS.PUBLISHING);
        //play preview
        session.createStream({
            name: streamName,
            display: remoteVideo
        }).on(STREAM_STATUS.PLAYING, function(previewStream){
            ...
        }).on(STREAM_STATUS.STOPPED, function(){
            publishStream.stop();
        }).on(STREAM_STATUS.FAILED, function(stream){
            //preview failed, stop publishStream
            if (publishStream.status() == STREAM_STATUS.PUBLISHING) {
                setStatus(STREAM_STATUS.FAILED, stream);
                publishStream.stop();
            }
        }).play();
        ...
    }).publish();

8. Receiving from the server an event confirming unpublishing of the stream.

StreamStatusEvent, status UNPUBLISHED code

    session.createStream({
        name: streamName,
        display: localVideo,
        constraints: constraints
    }).on(STREAM_STATUS.PUBLISHING, function(publishStream){
        ...
    }).on(STREAM_STATUS.UNPUBLISHED, function(){
        setStatus(STREAM_STATUS.UNPUBLISHED);
        //enable start button
        onStopped();
    }).on(STREAM_STATUS.FAILED, function(stream){
        ...
    }).publish();
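Step 1 of the call flow above polls the extension every 500 ms until it answers. The polling pattern can be sketched in isolation as follows. This is a minimal sketch, not the example's actual code: `pollUntilAvailable`, `checkInstalled` and the injectable `timers` object are hypothetical names, and `checkInstalled(callback)` merely stands in for the `chrome.runtime.sendMessage` call.

```javascript
// Hypothetical helper: calls checkInstalled every periodMs until it reports success.
// checkInstalled(cb) stands in for
// chrome.runtime.sendMessage(extensionId, {type: "isInstalled"}, cb).
function pollUntilAvailable(checkInstalled, onAvailable, onMissing, periodMs, timers) {
    // timers is injectable (an assumption of this sketch) so the loop can be driven manually
    timers = timers || {
        setInterval: function (fn, ms) { return setInterval(fn, ms); },
        clearInterval: function (id) { clearInterval(id); }
    };
    var done = false;
    var interval = timers.setInterval(function () {
        checkInstalled(function (response) {
            if (done) {
                return; // ignore late responses after the first success
            }
            if (response) {
                done = true;
                timers.clearInterval(interval); // stop polling once the extension answers
                onAvailable();
            } else {
                onMissing(); // e.g. show the install button
            }
        });
    }, periodMs);
    return interval;
}
```

Passing the timer functions in explicitly is only a convenience for trying the logic outside a browser; in a page, the fifth argument can be omitted.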

The screen sharing function can be used to publish a video stream that demonstrates the desktop or an application window.

WCS API uses a Chrome extension for screen sharing. Firefox does not require an extension since version 52.

1. If the web application is inside an iframe element, publishing of the video stream may fail.

Symptoms: IceServer errors in the browser console.

Solution: move the application out of the iframe to a separate page.

2. If the stream is published from Windows 10 or Windows 8 and hardware acceleration is enabled in the Google Chrome browser, bitrate problems are possible.

Symptoms: low video quality, muddy picture, bitrate shown in chrome://webrtc-internals is less than 100 kbps.

Solution: turn off hardware acceleration in the browser, or switch the browser or the server to use the VP8 codec.
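The bitrate symptom can also be checked in code rather than by reading the graphs. The sketch below computes the average send bitrate between two stats samples and compares it with the 100 kbps figure from the symptom description. It assumes each sample carries `bytesSent` (bytes) and `timestamp` (milliseconds), as `outbound-rtp` entries returned by `RTCPeerConnection.getStats()` do; the function names `bitrateKbps` and `isLowBitrate` are hypothetical.

```javascript
// Average send bitrate in kbps between two stats samples.
// Each sample is assumed to have bytesSent (bytes) and timestamp (milliseconds).
function bitrateKbps(prev, curr) {
    var elapsedMs = curr.timestamp - prev.timestamp;
    if (elapsedMs <= 0) {
        return 0; // no time elapsed between samples; report 0 instead of dividing by zero
    }
    var bits = (curr.bytesSent - prev.bytesSent) * 8;
    return bits / elapsedMs; // bits per millisecond equals kilobits per second
}

// Threshold taken from the symptom description above
function isLowBitrate(prev, curr) {
    return bitrateKbps(prev, curr) < 100;
}
```

In a browser the two samples would typically come from consecutive `getStats()` calls, filtered to the video `outbound-rtp` entry; how the application reaches the publishing peer connection is outside the scope of this sketch.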

3. All streams captured from the screen stop if any one of them stops

Symptoms: while multiple streams are captured from the screen on one tab in the Chrome browser, if one stream is stopped, all streams stop.

Solution: cache tracks by the source of the video and stop them along with the last stream that uses that source, for example:

    var handleUnpublished = function(stream) {
        console.log("Stream unpublished with status " + stream.status());
        //get track label
        var video = document.getElementById(stream.id() + LOCAL_CACHED_VIDEO);
        var track = video.srcObject.getVideoTracks()[0];
        var label = track.label;
        //check whether another display still uses this source
        if (countDisplaysWithVideoLabel(label) > 1) {
            //remove srcObject but don't stop tracks
            pushTrack(track);
            video.srcObject = null;
        } else {
            var tracks = popTracks(track);
            for (var i = 0; i < tracks.length; i++) {
                tracks[i].stop();
            }
        }
        //release resources
        Flashphoner.releaseLocalMedia(streamVideoDisplay);
        //remove stream display
        display.removeChild(streamDisplay);
        session.disconnect();
    };
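The handler above calls `pushTrack` and `popTracks` without showing them. One possible shape for these helpers is a cache keyed by track label. This is a sketch under assumptions, not the implementation from the WCS examples: the `trackCache` object and both function bodies are hypothetical.

```javascript
// Hypothetical cache: track label -> tracks detached from a display but not stopped yet
var trackCache = {};

// Remember a track whose display was removed while other displays still use the same source
function pushTrack(track) {
    if (!trackCache[track.label]) {
        trackCache[track.label] = [];
    }
    trackCache[track.label].push(track);
}

// Return the given track together with all cached tracks of the same label,
// and forget the cached ones so they are stopped only once
function popTracks(track) {
    var cached = trackCache[track.label] || [];
    delete trackCache[track.label];
    return cached.concat([track]);
}
```

With this shape, tracks are stopped only when the last stream using a given source is unpublished, which is the behavior the solution describes.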

4. The Chrome browser stops sending video traffic when the captured application window is minimized.

Symptoms: if the captured application window is minimized to the taskbar, the stream freezes on the subscribers' side, and publishing may fail because of RTP activity control.

Solution: in builds before 5.2.1784, disable video RTP activity control for all streams:

    rtp_activity_video=false

In builds since 5.2.1784, disable video RTP activity control by stream name template, for example:

    rtp_activity_video_exclude=.*-screen$
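To see which stream names the example template covers, the pattern can be tried in JavaScript. This is only an illustration: the server's own regular expression engine is assumed to treat this simple pattern the same way, and `isExcludedFromRtpActivity` is a hypothetical name.

```javascript
// Template from the setting above: matches any stream name ending in "-screen"
var excludeTemplate = new RegExp(".*-screen$");

// Returns true if a stream with this name would match the exclusion template
function isExcludedFromRtpActivity(streamName) {
    return excludeTemplate.test(streamName);
}
```

Under this template, screen sharing streams need names with the `-screen` suffix, for example `room1-screen`, while a name such as `room1-camera` stays under RTP activity control.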