
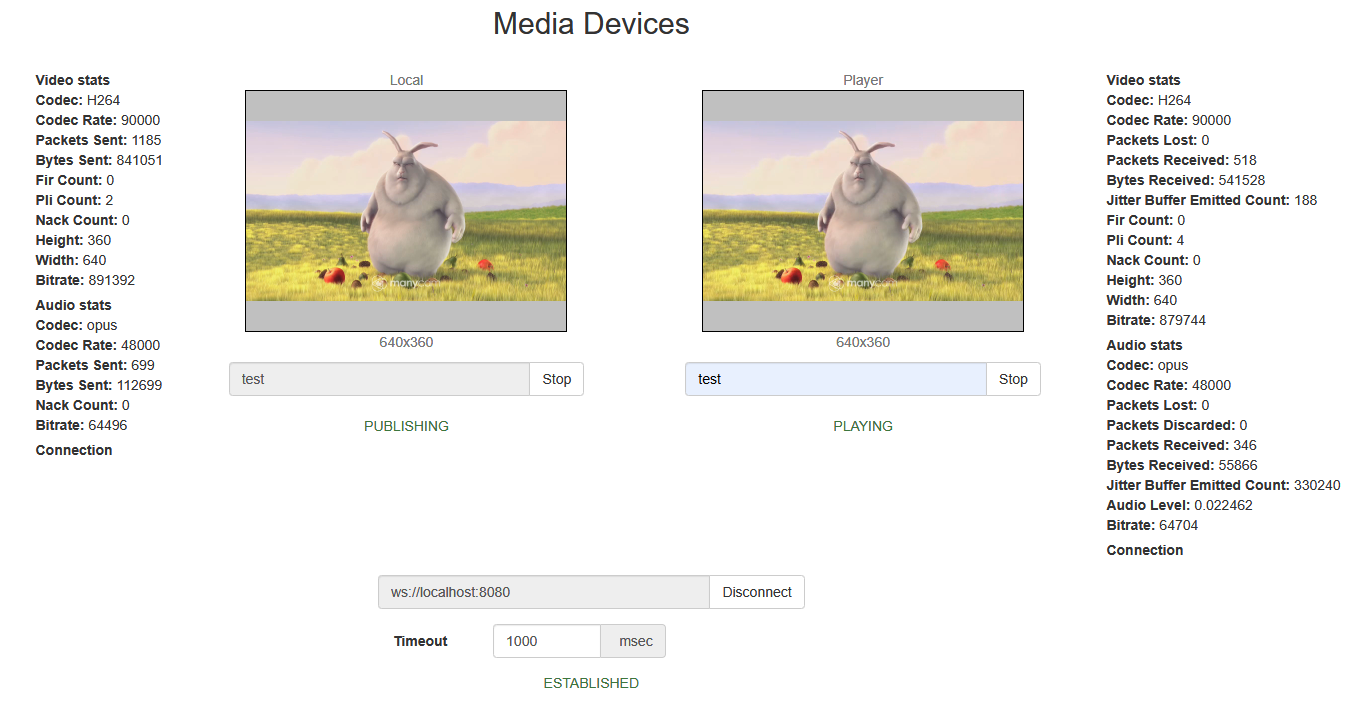

Example of a streamer with access to media devices

This streamer can be used to publish and play back the following types of streams on Web Call Server:

- WebRTC

- RTMFP

- RTMP

It also allows selecting media devices and parameters for the published video.

...

In the screenshot below, a stream is being published from the client.

Two video elements are displayed on the page:

- 'Local' - video from the camera

- 'PreviewPlayer' - the video as received from the server

Code of the example

The path to the source code of the example on the WCS server is:

...

Here host is the address of the WCS server.

...

Analyzing the code

To analyze the code, let's take the version of the file manager.js with hash cf0daabc6b86e21d5a2f9e4605366c8b7f0d27eb ecbadc3, which is available here and can be downloaded with the corresponding build 2.0.3.18.1894212.

1. Initialization of the API

Flashphoner.init() code

The API is initialized after the page is loaded. When the media providers are ready, the audio and video input forms are hidden if the Temasys plugin is in use.

```javascript
Flashphoner.init({
    screenSharingExtensionId: extensionId,
    mediaProvidersReadyCallback: function (mediaProviders) {
        if (Flashphoner.isUsingTemasys()) {
            $("#audioInputForm").hide();
            $("#videoInputForm").hide();
        }
    }
});
```

2. List available input media devices

Flashphoner.getMediaDevices() code

After API initialization, the list of available input media devices (cameras and microphones) is requested. When the input media devices are listed, the drop-down lists of microphones and cameras on the client page are filled.

```javascript
...
Flashphoner.getMediaDevices(null, true).then(function (list) {
    list.audio.forEach(function (device) {
        ...
    });
    list.video.forEach(function (device) {
        ...
    });
    ...
}).catch(function (error) {
    $("#notifyFlash").text("Failed to get media devices");
});
```
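The bodies of the forEach() callbacks are elided above. Below is a minimal, DOM-free sketch of what filling a drop-down list can involve, assuming that each device object exposes an `id` and a `label` (browsers may report an empty label until media access is granted, hence the fallback to the ID). The helper name toDeviceOptions is hypothetical and not part of the Flashphoner API.

```javascript
// Hypothetical helper: converts a device list into drop-down option data,
// skipping devices whose id is already present in the list.
// Assumes each device has an `id` and an optional `label`.
function toDeviceOptions(devices, existingValues) {
    const seen = new Set(existingValues || []);
    const options = [];
    for (const device of devices) {
        if (seen.has(device.id)) {
            continue; // already listed, e.g. after a repeated enumeration
        }
        seen.add(device.id);
        // Fall back to the id when the browser hides the label
        options.push({ value: device.id, text: device.label || device.id });
    }
    return options;
}

// Usage with a made-up device list
const micOptions = toDeviceOptions(
    [
        { id: "mic-1", label: "Built-in Microphone" },
        { id: "mic-2", label: "" },
        { id: "mic-1", label: "Built-in Microphone" }
    ],
    []
);
console.log(micOptions);
```

In the page, each returned entry could then be appended to the corresponding `<select>` element, for example with `select.add(new Option(option.text, option.value))`.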

3. List available output media devices

Flashphoner.getMediaDevices() code

When output media devices are listed, the drop-down list of speakers and headphones on the client page is filled. In case of failure, the warning "Failed to get media devices" is displayed.

```javascript
Flashphoner.getMediaDevices(null, true, MEDIA_DEVICE_KIND.OUTPUT).then(function (list) {
    list.audio.forEach(function (device) {
        ...
    });
    ...
}).catch(function (error) {
    $("#notifyFlash").text("Failed to get media devices");
});
```

4. Get audio and video publishing constraints from the client page

getConstraints() code

Publishing sources:

- camera (sendVideo)

- microphone (sendAudio)

```javascript
constraints = {
    audio: $("#sendAudio").is(':checked'),
    video: $("#sendVideo").is(':checked'),
};
```

Audio constraints:

- microphone choice (deviceId)

- forward error correction for the Opus codec (fec)

- stereo mode (stereo)

- audio bitrate (bitrate)

```javascript
if (constraints.audio) {
    constraints.audio = {
        deviceId: $('#audioInput').val()
    };
    if ($("#fec").is(':checked'))
        constraints.audio.fec = $("#fec").is(':checked');
    if ($("#sendStereoAudio").is(':checked'))
        constraints.audio.stereo = $("#sendStereoAudio").is(':checked');
    if (parseInt($('#sendAudioBitrate').val()) > 0)
        constraints.audio.bitrate = parseInt($('#sendAudioBitrate').val());
}
```
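The audio part of getConstraints() can also be written as a plain function that takes already-read form values instead of querying the page with jQuery, which makes the logic testable outside a browser. This is a sketch only; buildAudioConstraints and the shape of its `form` argument are hypothetical.

```javascript
// Hypothetical, DOM-free version of the audio part of getConstraints().
// `form` stands in for the values of #sendAudio, #audioInput, #fec,
// #sendStereoAudio and #sendAudioBitrate.
function buildAudioConstraints(form) {
    if (!form.sendAudio) {
        return false; // audio is not published
    }
    const audio = { deviceId: form.deviceId };
    if (form.fec) {
        audio.fec = true;
    }
    if (form.stereo) {
        audio.stereo = true;
    }
    // parseInt("") is NaN, and NaN > 0 is false, so an empty field is skipped
    const bitrate = parseInt(form.bitrate, 10);
    if (bitrate > 0) {
        audio.bitrate = bitrate;
    }
    return audio;
}

console.log(buildAudioConstraints({
    sendAudio: true, deviceId: "mic-1", fec: true, stereo: false, bitrate: "64"
}));
```

As in the original code, optional fields are only added when they are enabled, so the server-side defaults apply otherwise.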

Video constraints:

- camera choice (deviceId)

- published video size (width, height)

- minimum and maximum video bitrate (minBitrate, maxBitrate)

- FPS (frameRate)

```javascript
...
constraints.video = {
    deviceId: {exact: $('#videoInput').val()},
    width: parseInt($('#sendWidth').val()),
    height: parseInt($('#sendHeight').val())
};
if (Browser.isSafariWebRTC() && Browser.isiOS() && Flashphoner.getMediaProviders()[0] === "WebRTC") {
    constraints.video.deviceId = {exact: $('#videoInput').val()};
}
if (parseInt($('#sendVideoMinBitrate').val()) > 0)
    constraints.video.minBitrate = parseInt($('#sendVideoMinBitrate').val());
if (parseInt($('#sendVideoMaxBitrate').val()) > 0)
    constraints.video.maxBitrate = parseInt($('#sendVideoMaxBitrate').val());
if (parseInt($('#fps').val()) > 0)
    constraints.video.frameRate = parseInt($('#fps').val());
```
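The video part follows the same pattern. In the standard MediaTrackConstraints model, wrapping the device ID in `{exact: ...}` makes getUserMedia() reject with an OverconstrainedError if that camera cannot be used, instead of silently falling back to another device; whether the constraints reach getUserMedia() unchanged is an assumption here. The function name and the `form` argument below are hypothetical.

```javascript
// Hypothetical, DOM-free version of the video part of getConstraints().
// `form` stands in for the values of #sendVideo, #videoInput, #sendWidth,
// #sendHeight, #sendVideoMinBitrate, #sendVideoMaxBitrate and #fps
// (strings, as jQuery .val() would return them).
function buildVideoConstraints(form) {
    if (!form.sendVideo) {
        return false; // video is not published
    }
    const video = {
        // exact: fail rather than switch to another camera
        deviceId: { exact: form.deviceId },
        width: parseInt(form.width, 10),
        height: parseInt(form.height, 10)
    };
    // Optional fields are set only when a positive number was entered
    const optional = [
        ["minBitrate", form.minBitrate],
        ["maxBitrate", form.maxBitrate],
        ["frameRate", form.fps]
    ];
    for (const [key, raw] of optional) {
        const value = parseInt(raw, 10);
        if (value > 0) {
            video[key] = value;
        }
    }
    return video;
}

console.log(buildVideoConstraints({
    sendVideo: true, deviceId: "cam-1", width: "640", height: "480",
    minBitrate: "", maxBitrate: "1000", fps: "30"
}));
```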

5. Get access to media devices for a local test

Flashphoner.getMediaAccess() code

The audio and video constraints and the `<div>` element used to display the captured video are passed to the method.

```javascript
Flashphoner.getMediaAccess(getConstraints(), localVideo).then(function (disp) {
    $("#testBtn").text("Release").off('click').click(function () {
        $(this).prop('disabled', true);
        stopTest();
    }).prop('disabled', false);
    ...
    testStarted = true;
}).catch(function (error) {
    $("#testBtn").prop('disabled', false);
    testStarted = false;
});
```

6. Connecting to the server

Flashphoner.createSession() code

```javascript
Flashphoner.createSession({urlServer: url, timeout: tm}).on(SESSION_STATUS.ESTABLISHED, function (session) {
    ...
}).on(SESSION_STATUS.DISCONNECTED, function () {
    ...
}).on(SESSION_STATUS.FAILED, function () {
    ...
});
```
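Each .on() call in this chain returns an object on which .on() can be called again, which is what lets the ESTABLISHED, DISCONNECTED and FAILED handlers be attached one after another. The stand-in class below is not the Flashphoner implementation; it only illustrates the chaining pattern, with plain strings in place of the SESSION_STATUS constants.

```javascript
// Stand-in emitter (NOT the Flashphoner API) illustrating chained .on() calls.
// In this sketch a later handler for the same status replaces the earlier one.
class StatusEmitter {
    constructor() {
        this.handlers = new Map();
    }
    on(status, handler) {
        this.handlers.set(status, handler);
        return this; // returning `this` is what makes chaining possible
    }
    emit(status, payload) {
        const handler = this.handlers.get(status);
        if (handler) {
            handler(payload);
        }
        return this;
    }
}

// Usage: register handlers in one chain, then simulate a status change
const fakeSession = new StatusEmitter()
    .on("ESTABLISHED", (s) => console.log("connected as", s.name))
    .on("DISCONNECTED", () => console.log("disconnected"))
    .on("FAILED", () => console.log("failed"));
fakeSession.emit("ESTABLISHED", { name: "demo" });
```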

7. Receiving the event confirming successful connection

ConnectionStatusEvent ESTABLISHED code

```javascript
...
Flashphoner.createSession({urlServer: url, timeout: tm}).on(SESSION_STATUS.ESTABLISHED, function (session) {
    setStatus("#connectStatus", session.status());
    onConnected(session);
    ...
});
```

8. Stream publishing

session.createStream(), publishStream.publish() code

When the stream is created, the following parameters are passed, among others:

- name - name of the stream

- display - `<div>` element localVideo, in which video from the camera will be displayed

- constraints - audio and video constraints (see step 4)

Callback functions for the STREAM_STATUS.PUBLISHING, STREAM_STATUS.UNPUBLISHED and STREAM_STATUS.FAILED events can be added when the stream is created.

```javascript
publishStream = session.createStream({
    name: streamName,
    display: localVideo,
    cacheLocalResources: true,
    constraints: constraints,
    mediaConnectionConstraints: mediaConnectionConstraints,
    sdpHook: rewriteSdp,
    transport: transportInput,
    cvoExtension: cvo,
    stripCodecs: strippedCodecs,
    videoContentHint: contentHint
    ...
});
publishStream.publish();
```

9. Receiving the event confirming successful streaming

StreamStatusEvent PUBLISHING code

```javascript
publishStream = session.createStream({
    ...
}).on(STREAM_STATUS.PUBLISHING, function (stream) {
    $("#testBtn").prop('disabled', true);
    var video = document.getElementById(stream.id());
    //resize local if resolution is available
    if (video.videoWidth > 0 && video.videoHeight > 0) {
        resizeLocalVideo({target: video});
    }
    enablePublishToggles(true);
    if ($("#muteVideoToggle").is(":checked")) {
        muteVideo();
    }
    if ($("#muteAudioToggle").is(":checked")) {
        muteAudio();
    }
    //remove resize listener in case this video was cached earlier
    video.removeEventListener('resize', resizeLocalVideo);
    video.addEventListener('resize', resizeLocalVideo);
    publishStream.setMicrophoneGain(currentGainValue);
    setStatus("#publishStatus", STREAM_STATUS.PUBLISHING);
    onPublishing(stream);
}).on(STREAM_STATUS.UNPUBLISHED, function () {
    ...
}).on(STREAM_STATUS.FAILED, function () {
    ...
});
publishStream.publish();
```
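The body of resizeLocalVideo() is not shown in the excerpt, so its exact behaviour is unknown. As an illustration only, here is a hypothetical helper that fits a video of known resolution into a container while preserving the aspect ratio, using the same "resolution is available" guard as the handler above.

```javascript
// Hypothetical helper (not taken from the example source): scale a video of
// videoWidth x videoHeight to fit inside boxWidth x boxHeight, keeping the
// aspect ratio. Returns null while the resolution is still unknown.
function fitToBox(videoWidth, videoHeight, boxWidth, boxHeight) {
    if (videoWidth <= 0 || videoHeight <= 0) {
        return null; // resolution not available yet
    }
    // The smaller ratio is the limiting dimension
    const scale = Math.min(boxWidth / videoWidth, boxHeight / videoHeight);
    return {
        width: Math.floor(videoWidth * scale),
        height: Math.floor(videoHeight * scale)
    };
}

// A 1280x720 picture in a 320x240 box is limited by width
console.log(fitToBox(1280, 720, 320, 240));
```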

10. Stream playback

session.createStream(), previewStream.play() code

```javascript
previewStream = session.createStream({
    name: streamName,
    display: remoteVideo,
    constraints: constraints,
    transport: transportOutput,
    stripCodecs: strippedCodecs
    ...
});
previewStream.play();
```

11. Receiving the event confirming successful playback

StreamStatusEvent PLAYING code

```javascript
previewStream = session.createStream({
    ...
}).on(STREAM_STATUS.PLAYING, function (stream) {
    playConnectionQualityStat.connectionQualityUpdateTimestamp = new Date().valueOf();
    setStatus("#playStatus", stream.status());
    onPlaying(stream);
    document.getElementById(stream.id()).addEventListener('resize', function (event) {
        $("#playResolution").text(event.target.videoWidth + "x" + event.target.videoHeight);
        resizeVideo(event.target);
    });
    //wait for incoming stream
    if (Flashphoner.getMediaProviders()[0] == "WebRTC") {
        setTimeout(function () {
            if(Browser.isChrome()) {
                detectSpeechChrome(stream);
            } else {
                detectSpeech(stream);
            }
        }, 3000);
    }
    ...
});
previewStream.play();
```

12. Stop stream playback

stream.stop() code

```javascript
$("#playBtn").text("Stop").off('click').click(function () {
    $(this).prop('disabled', true);
    stream.stop();
}).prop('disabled', false);
```

13. Receiving the event confirming successful playback stop

StreamStatusEvent STOPPED code

```javascript
previewStream = session.createStream({
    ...
}).on(STREAM_STATUS.STOPPED, function () {
    setStatus("#playStatus", STREAM_STATUS.STOPPED);
    onStopped();
    ...
});
previewStream.play();
```

14. Stop stream publishing

stream.stop() code

```javascript
$("#publishBtn").text("Stop").off('click').click(function () {
    $(this).prop('disabled', true);
    stream.stop();
}).prop('disabled', false);
```

15. Receiving the event confirming successful publishing stop

StreamStatusEvent UNPUBLISHED code

```javascript
publishStream = session.createStream({
    ...
}).on(STREAM_STATUS.UNPUBLISHED, function () {
    setStatus("#publishStatus", STREAM_STATUS.UNPUBLISHED);
    onUnpublished();
    ...
});
publishStream.publish();
```

16. Mute publisher audio

stream.muteAudio() code:

```javascript
function muteAudio() {
    if (publishStream) {
        publishStream.muteAudio();
    }
}
```

17. Mute publisher video

stream.muteVideo() code:

```javascript
function muteVideo() {
    if (publishStream) {
        publishStream.muteVideo();
    }
}
```

18. Show WebRTC stream publishing statistics

stream.getStats() code:

```javascript
publishStream.getStats(function (stats) {
    if (stats && stats.outboundStream) {
        if (stats.outboundStream.video) {
            showStat(stats.outboundStream.video, "outVideoStat");
            let vBitrate = (stats.outboundStream.video.bytesSent - videoBytesSent) * 8;
            if ($('#outVideoStatBitrate').length == 0) {
                let html = "<div>Bitrate: " + "<span id='outVideoStatBitrate' style='font-weight: normal'>" + vBitrate + "</span>" + "</div>";
                $("#outVideoStat").append(html);
            } else {
                $('#outVideoStatBitrate').text(vBitrate);
            }
            videoBytesSent = stats.outboundStream.video.bytesSent;
            ...
        }
        if (stats.outboundStream.audio) {
            showStat(stats.outboundStream.audio, "outAudioStat");
            let aBitrate = (stats.outboundStream.audio.bytesSent - audioBytesSent) * 8;
            if ($('#outAudioStatBitrate').length == 0) {
                let html = "<div>Bitrate: " + "<span id='outAudioStatBitrate' style='font-weight: normal'>" + aBitrate + "</span>" + "</div>";
                $("#outAudioStat").append(html);
            } else {
                $('#outAudioStatBitrate').text(aBitrate);
            }
            audioBytesSent = stats.outboundStream.audio.bytesSent;
        }
    }
    ...
});
```
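In the statistics code above, the bitrate is the difference between two cumulative bytesSent values multiplied by 8. That is a number of bits per polling period, so it equals bits per second only if getStats() is called once per second (the polling interval is not shown in the excerpt). A sketch that divides by the measured interval instead; bitrateBps is a hypothetical helper, and the guard for counters going backwards is an added assumption.

```javascript
// Hypothetical helper: bitrate in bits per second from two cumulative
// byte counters taken intervalMs milliseconds apart.
function bitrateBps(prevBytes, currBytes, intervalMs) {
    if (intervalMs <= 0 || currBytes < prevBytes) {
        return 0; // bad interval, or counter reset (e.g. stream re-created)
    }
    // bytes -> bits (* 8), then per millisecond -> per second (* 1000)
    return Math.round(((currBytes - prevBytes) * 8 * 1000) / intervalMs);
}

// 125000 bytes in one second is 1 Mbit/s
console.log(bitrateBps(0, 125000, 1000));
// The same formula works for any polling period
console.log(bitrateBps(1000, 3000, 2000));
```

The same helper applies to bytesReceived on the playback side, since both counters are cumulative.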

19. Show WebRTC stream playback statistics

stream.getStats() code:

```javascript
previewStream.getStats(function (stats) {
    if (stats && stats.inboundStream) {
        if (stats.inboundStream.video) {
            showStat(stats.inboundStream.video, "inVideoStat");
            let vBitrate = (stats.inboundStream.video.bytesReceived - videoBytesReceived) * 8;
            if ($('#inVideoStatBitrate').length == 0) {
                let html = "<div>Bitrate: " + "<span id='inVideoStatBitrate' style='font-weight: normal'>" + vBitrate + "</span>" + "</div>";
                $("#inVideoStat").append(html);
            } else {
                $('#inVideoStatBitrate').text(vBitrate);
            }
            videoBytesReceived = stats.inboundStream.video.bytesReceived;
            ...
        }
        if (stats.inboundStream.audio) {
            showStat(stats.inboundStream.audio, "inAudioStat");
            let aBitrate = (stats.inboundStream.audio.bytesReceived - audioBytesReceived) * 8;
            if ($('#inAudioStatBitrate').length == 0) {
                let html = "<div style='font-weight: bold'>Bitrate: " + "<span id='inAudioStatBitrate' style='font-weight: normal'>" + aBitrate + "</span>" + "</div>";
                $("#inAudioStat").append(html);
            } else {
                $('#inAudioStatBitrate').text(aBitrate);
            }
            audioBytesReceived = stats.inboundStream.audio.bytesReceived;
        }
        ...
    }
});
```

20. Speech detection using ScriptProcessor interface (any browser except Chrome)

audioContext.createMediaStreamSource(), audioContext.createScriptProcessor() code

...

```javascript
function detectSpeech(stream, level, latency) {
    var mediaStream = document.getElementById(stream.id()).srcObject;
    var source = audioContext.createMediaStreamSource(mediaStream);
    var processor = audioContext.createScriptProcessor(512);
    processor.onaudioprocess = handleAudio;
    processor.connect(audioContext.destination);
    processor.clipping = false;
    processor.lastClip = 0;
    // threshold: sample amplitude at or above which audio counts as speech
    processor.threshold = level || 0.10;
    // latency: how long (ms) the speech flag is held after the last loud sample
    processor.latency = latency || 750;
    processor.isSpeech =
        function () {
            if (!this.clipping) return false;
            if ((this.lastClip + this.latency) < window.performance.now()) this.clipping = false;
            return this.clipping;
        };
    source.connect(processor);
    // Check speech every 500 ms
    speechIntervalID = setInterval(function () {
        if (processor.isSpeech()) {
            $("#talking").css('background-color', 'green');
        } else {
            $("#talking").css('background-color', 'red');
        }
    }, 500);
}
```

Audio data handler code

```javascript
function handleAudio(event) {
    var buf = event.inputBuffer.getChannelData(0);
    var bufLength = buf.length;
    var x;
    for (var i = 0; i < bufLength; i++) {
        x = buf[i];
        if (Math.abs(x) >= this.threshold) {
            this.clipping = true;
            this.lastClip = window.performance.now();
        }
    }
}
```
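The clipping logic in detectSpeech() and handleAudio() does not depend on the Web Audio API itself. The sketch below isolates it, with the current time passed in explicitly instead of calling window.performance.now(), so it can run outside a browser; createClipDetector is a hypothetical name. Note that ScriptProcessorNode is deprecated in the Web Audio API specification in favour of AudioWorkletNode, where the same per-sample loop could run.

```javascript
// DOM-free sketch of the detector logic from detectSpeech()/handleAudio():
// a sample whose absolute value reaches `threshold` marks "clipping", and the
// speech flag stays on for `latency` ms after the last such sample.
function createClipDetector(threshold = 0.1, latency = 750) {
    let clipping = false;
    let lastClip = 0;
    return {
        // samples: array-like of floats in [-1, 1], now: time in ms
        process(samples, now) {
            for (const x of samples) {
                if (Math.abs(x) >= threshold) {
                    clipping = true;
                    lastClip = now;
                }
            }
        },
        isSpeech(now) {
            if (!clipping) return false;
            if (lastClip + latency < now) clipping = false;
            return clipping;
        }
    };
}

const detector = createClipDetector(0.1, 750);
detector.process(new Float32Array([0.01, -0.2, 0.05]), 1000);
console.log(detector.isSpeech(1500)); // still within the latency window
console.log(detector.isSpeech(1800)); // window expired
```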

21. Speech detection using incoming audio WebRTC statistics in Chrome

stream.getStats() code

```javascript
...
function detectSpeechChrome(stream, level, latency) {
    statSpeechDetector.threshold = level || 0.010;
    statSpeechDetector.latency = latency || 750;
    statSpeechDetector.clipping = false;
    statSpeechDetector.lastClip = 0;
    speechIntervalID = setInterval(function() {
        stream.getStats(function(stat) {
            let audioStats = stat.inboundStream.audio;
            if(!audioStats) {
                return;
            }
            // Using audioLevel WebRTC stats parameter
            if (audioStats.audioLevel >= statSpeechDetector.threshold) {
                statSpeechDetector.clipping = true;
                statSpeechDetector.lastClip = window.performance.now();
            }
            if ((statSpeechDetector.lastClip + statSpeechDetector.latency) < window.performance.now()) {
                statSpeechDetector.clipping = false;
            }
            if (statSpeechDetector.clipping) {
                $("#talking").css('background-color', 'green');
            } else {
                $("#talking").css('background-color', 'red');
            }
        });
    },500);
}
```
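Here the decision is based on the audioLevel value of the incoming audio statistics, which the W3C WebRTC statistics specification defines as a linear value between 0 and 1. The sketch below replays a series of polled values through the same threshold and latency rule as detectSpeechChrome() and returns the indicator colour for each poll; replayIndicator and the `{time, audioLevel}` sample format are hypothetical.

```javascript
// Replays {time, audioLevel} samples (time in ms, as if polled every 500 ms)
// through the threshold/latency rule of detectSpeechChrome() and returns
// the indicator colour for each poll.
function replayIndicator(samples, threshold = 0.01, latency = 750) {
    let clipping = false;
    let lastClip = 0;
    return samples.map(({ time, audioLevel }) => {
        // A missing audioLevel compares as false, like the original check
        if (audioLevel >= threshold) {
            clipping = true;
            lastClip = time;
        }
        if (lastClip + latency < time) {
            clipping = false;
        }
        return clipping ? "green" : "red";
    });
}

console.log(replayIndicator([
    { time: 0, audioLevel: 0.2 },
    { time: 500, audioLevel: 0 },
    { time: 1000, audioLevel: 0 },
    { time: 1500, audioLevel: 0 }
]));
```

With a 500 ms polling period and a 750 ms latency, one loud poll keeps the indicator green for the following poll as well, which smooths short pauses between words.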