Overview

Supported platforms and browsers

| | Chrome 66+ | Firefox 59+ | Safari 11.1 |
|---|---|---|---|
| Windows | + | + | |
| Mac OS | + | + | + |
| Android | + | + | |
| iOS | - | - | + |

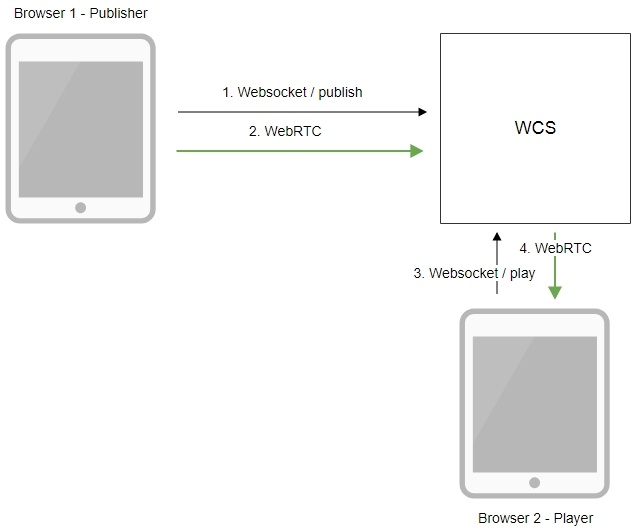

Operation flowchart

1. The browser establishes a connection to the server via the WebSocket protocol and sends the publish command.

2. The browser captures the image of an HTML5 Canvas element and sends a WebRTC stream to the server.

3. The second browser also establishes a connection via WebSocket and sends the play command.

4. The second browser receives the WebRTC stream and plays it on the page.

Quick manual on testing

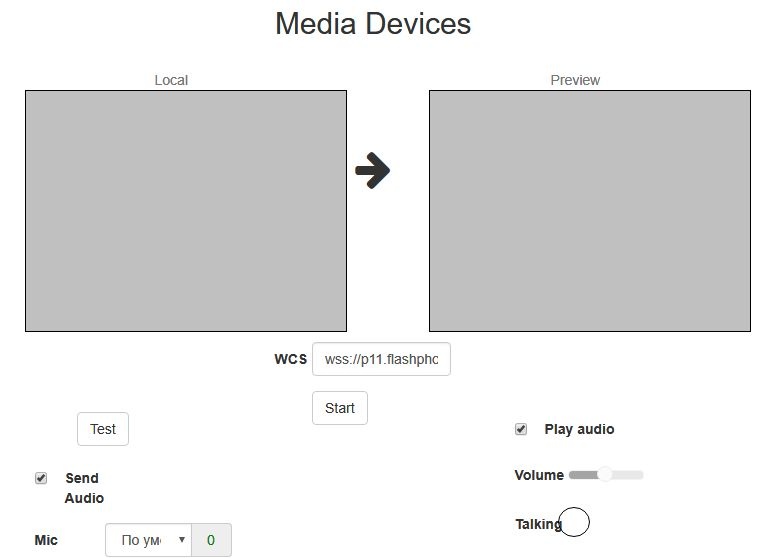

Capturing a video stream from HTML5 Canvas and preparing for publishing

1. For the test we use the demo server at demo.flashphoner.com and the Media Devices web application in the Chrome browser.

2. In the "Send Video" section, select "Canvas".

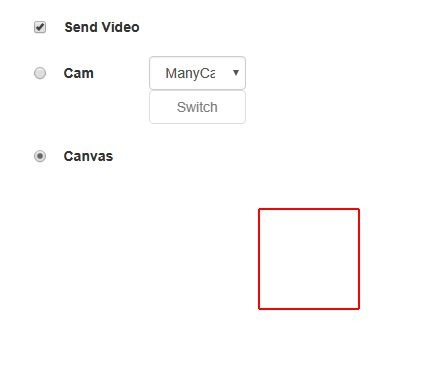

3. Click the "Start" button. The image drawn on the HTML5 Canvas (red frame) starts broadcasting.

4. In chrome://webrtc-internals, make sure the stream is being sent to the server and the system operates normally.

5. Open Two Way Streaming in a new window, click Connect, specify the stream ID, and click Play.

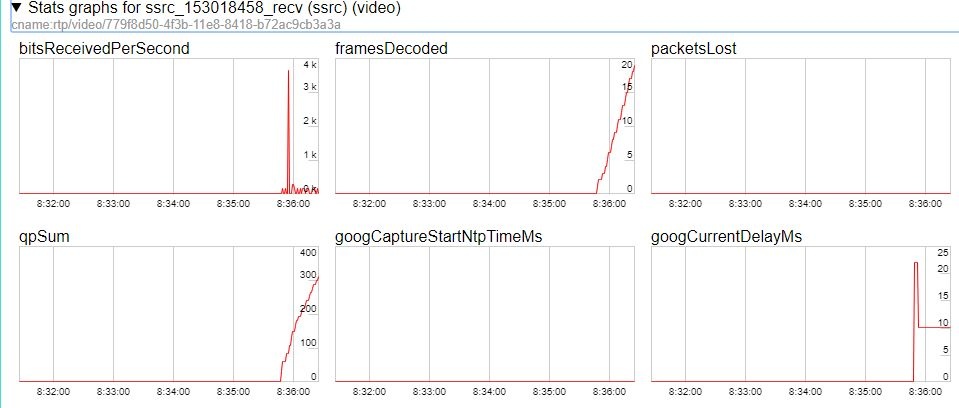

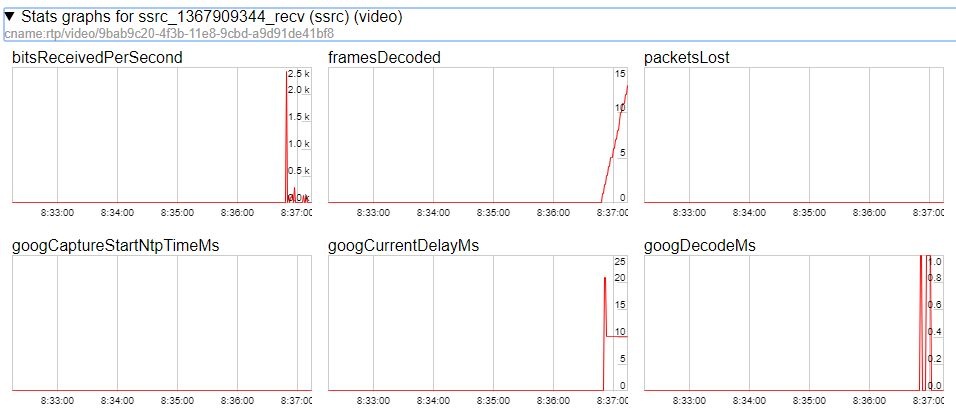

6. Check the playback graphs in chrome://webrtc-internals.

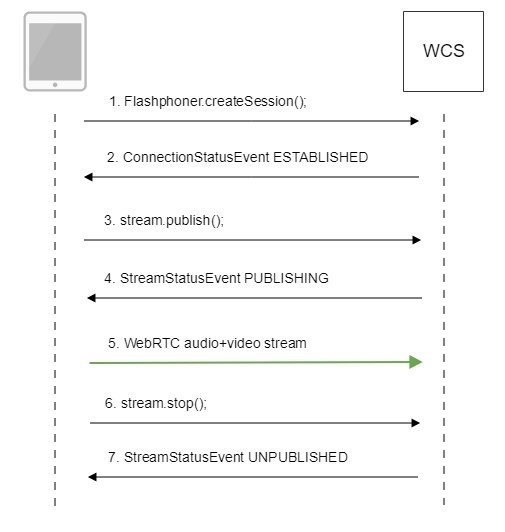

Call flow

Below is the call flow in the Media Devices example.

1. Establishing a connection to the server.

Flashphoner.createSession(); code

    Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
        //session connected, start streaming
        startStreaming(session);
    }).on(SESSION_STATUS.DISCONNECTED, function(){
        setStatus(SESSION_STATUS.DISCONNECTED);
        onStopped();
    }).on(SESSION_STATUS.FAILED, function(){
        setStatus(SESSION_STATUS.FAILED);
        onStopped();
    });
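
The chained handlers above can also be wrapped in a Promise so that the rest of the application can wait for the session. This is only a minimal sketch: `connect`, `sdk`, and `statuses` are hypothetical names, and it assumes nothing beyond the `createSession({urlServer: ...})` call and the chained `.on()` handlers shown above. The SDK object and the status map are passed in as arguments rather than read from globals.

```javascript
// Hypothetical helper: wraps the chained session handlers in a Promise.
// `sdk` is expected to expose createSession() as in the snippet above;
// `statuses` is the SESSION_STATUS map used there.
function connect(sdk, statuses, url) {
    return new Promise(function (resolve, reject) {
        sdk.createSession({urlServer: url})
            .on(statuses.ESTABLISHED, function (session) {
                resolve(session);
            })
            .on(statuses.DISCONNECTED, function () {
                // Has no effect once the Promise is already resolved, so a
                // disconnect after ESTABLISHED must be handled separately.
                reject(new Error(statuses.DISCONNECTED));
            })
            .on(statuses.FAILED, function () {
                reject(new Error(statuses.FAILED));
            });
    });
}
```

In the page this could be called as `connect(Flashphoner, SESSION_STATUS, url).then(startStreaming)`, with `startStreaming` being the function used in the example above.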

2. Receiving from the server an event confirming successful connection.

ConnectionStatusEvent ESTABLISHED code

    Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function(session){
        //session connected, start streaming
        startStreaming(session);
        ...
    });

3. Configuring capturing from the HTML5 Canvas element

getConstraints(); code

    if (constraints.video) {
        if (constraints.customStream) {
            constraints.customStream = canvas.captureStream(30);
            constraints.video = false;
        } else {
            ...
        }
    }
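
The same configuration can be expressed as a small function that does not modify an existing object and checks that the element can be captured at all. This is a sketch, not the example's actual code: `buildCanvasConstraints` is a hypothetical name, and the default of 30 frames per second is simply the value used in the snippet above.

```javascript
// Hypothetical helper: returns publishing constraints for a canvas source,
// or null when the element does not support captureStream().
function buildCanvasConstraints(canvas, frameRate) {
    if (!canvas || typeof canvas.captureStream !== "function") {
        return null;
    }
    var fps = frameRate === undefined ? 30 : frameRate;
    return {
        // The captured stream replaces the camera, so regular video capture is disabled.
        video: false,
        customStream: canvas.captureStream(fps)
    };
}
```

A `null` result lets the caller fall back to camera capture or show a message in browsers where canvas capture is unavailable.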

4. Publishing the stream.

stream.publish(); code

    publishStream = session.createStream({
        name: streamName,
        display: localVideo,
        cacheLocalResources: true,
        constraints: constraints,
        mediaConnectionConstraints: mediaConnectionConstraints
    }).on(STREAM_STATUS.PUBLISHING, function (publishStream) {
        ...
    }).on(STREAM_STATUS.UNPUBLISHED, function () {
        ...
    }).on(STREAM_STATUS.FAILED, function () {
        ...
    });
    publishStream.publish();

5. Receiving from the server an event confirming successful publishing of the stream.

StreamStatusEvent, status PUBLISHING code

    publishStream = session.createStream({
        name: streamName,
        display: localVideo,
        cacheLocalResources: true,
        constraints: constraints,
        mediaConnectionConstraints: mediaConnectionConstraints
    }).on(STREAM_STATUS.PUBLISHING, function (publishStream) {
        $("#testBtn").prop('disabled', true);
        var video = document.getElementById(publishStream.id());
        //resize local if resolution is available
        if (video.videoWidth > 0 && video.videoHeight > 0) {
            resizeLocalVideo({target: video});
        }
        enableMuteToggles(true);
        if ($("#muteVideoToggle").is(":checked")) {
            muteVideo();
        }
        if ($("#muteAudioToggle").is(":checked")) {
            muteAudio();
        }
        //remove resize listener in case this video was cached earlier
        video.removeEventListener('resize', resizeLocalVideo);
        video.addEventListener('resize', resizeLocalVideo);
        setStatus(STREAM_STATUS.PUBLISHING);
        //play preview
        var constraints = {
            audio: $("#playAudio").is(':checked'),
            video: $("#playVideo").is(':checked')
        };
        if (constraints.video) {
            constraints.video = {
                width: (!$("#receiveDefaultSize").is(":checked")) ? parseInt($('#receiveWidth').val()) : 0,
                height: (!$("#receiveDefaultSize").is(":checked")) ? parseInt($('#receiveHeight').val()) : 0,
                bitrate: (!$("#receiveDefaultBitrate").is(":checked")) ? $("#receiveBitrate").val() : 0,
                quality: (!$("#receiveDefaultQuality").is(":checked")) ? $('#quality').val() : 0
            };
        }
        previewStream = session.createStream({
            name: streamName,
            display: remoteVideo,
            constraints: constraints
            ...
        });
        previewStream.play();
    }).on(STREAM_STATUS.UNPUBLISHED, function () {
        ...
    }).on(STREAM_STATUS.FAILED, function () {
        ...
    });
    publishStream.publish();

6. Sending the audio-video stream via WebRTC

7. Stopping publishing the stream.

stream.stop(); code

    publishStream = session.createStream({
        name: streamName,
        display: localVideo,
        cacheLocalResources: true,
        constraints: constraints,
        mediaConnectionConstraints: mediaConnectionConstraints
    }).on(STREAM_STATUS.PUBLISHING, function (publishStream) {
        ...
        previewStream = session.createStream({
            name: streamName,
            display: remoteVideo,
            constraints: constraints
        }).on(STREAM_STATUS.PLAYING, function (previewStream) {
            ...
        }).on(STREAM_STATUS.STOPPED, function () {
            publishStream.stop();
        }).on(STREAM_STATUS.FAILED, function () {
            //preview failed, stop publishStream
            if (publishStream.status() == STREAM_STATUS.PUBLISHING) {
                setStatus(STREAM_STATUS.FAILED);
                publishStream.stop();
            }
        });
        previewStream.play();
    }).on(STREAM_STATUS.UNPUBLISHED, function () {
        ...
    }).on(STREAM_STATUS.FAILED, function () {
        ...
    });
    publishStream.publish();

8. Receiving from the server an event confirming successful unpublishing of the stream.

StreamStatusEvent, status UNPUBLISHED code

    publishStream = session.createStream({
        name: streamName,
        display: localVideo,
        cacheLocalResources: true,
        constraints: constraints,
        mediaConnectionConstraints: mediaConnectionConstraints
    }).on(STREAM_STATUS.PUBLISHING, function (publishStream) {
        ...
    }).on(STREAM_STATUS.UNPUBLISHED, function () {
        setStatus(STREAM_STATUS.UNPUBLISHED);
        //enable start button
        onStopped();
    }).on(STREAM_STATUS.FAILED, function () {
        ...
    });
    publishStream.publish();

For developers

The capability to capture a video stream from an HTML5 Canvas element is available in the WCS WebSDK starting from this version of the JavaScript API. The source code of the example is located in examples/demo/streaming/media_devices_manager/.

You can use this capability to capture your own video stream rendered in the browser, for example:

    var audioStream = new window.MediaStream();
    var videoStream = videoElement.captureStream(30);
    var audioTrack = videoStream.getAudioTracks()[0];
    audioStream.addTrack(audioTrack);
    publishStream = session.createStream({
        name: streamName,
        display: localVideo,
        constraints: {
            customStream: audioStream
        },
    });
    publishStream.publish();
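
In the snippet above, `getAudioTracks()[0]` is `undefined` when the captured element has no audio, and passing that to `addTrack()` throws. A guarded variant might look like the sketch below. `audioOnlyStream` is a hypothetical helper, and the stream constructor is passed in as an argument (in a browser it would be `window.MediaStream`) so the function has no hidden dependency on globals.

```javascript
// Hypothetical helper: builds a stream holding only the first audio track
// of a captured stream, or returns null when there is no audio track.
function audioOnlyStream(StreamCtor, capturedStream) {
    var audioTracks = capturedStream.getAudioTracks();
    if (audioTracks.length === 0) {
        return null;
    }
    // The MediaStream constructor accepts an array of tracks.
    return new StreamCtor([audioTracks[0]]);
}
```

In the page, the result of `audioOnlyStream(window.MediaStream, videoElement.captureStream(30))` would be assigned to `constraints.customStream` only when it is not `null`.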

Capturing from a video element works in Chrome:

constraints.customStream = videoElement.captureStream(30);

Capturing from a canvas element works in Chrome 66, Firefox 59 and Mac OS Safari 11.1:

constraints.customStream = canvas.captureStream(30);

Known issues

1. Capturing from an HTML5 Video element does not work in Firefox and Safari.

Solution: use this capability only in the Chrome browser.

2. In the Media Devices example, when capturing from an HTML5 Canvas:

- in Firefox, the local video does not display what is rendered;

- in Chrome, the local video does not display a black background.

Solution: take browser-specific behavior into account during development.

3. If the web application is inside an iframe element, publishing of the video stream may fail.

Symptoms: IceServer errors in the browser console.

Solution: move the app out of the iframe to a separate page.
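
A page can check whether it is running inside a frame before it attempts to publish, and warn the user or redirect. A minimal sketch: `isInsideIframe` is a hypothetical helper, and the window object is passed in as an argument so the check can be exercised outside a browser.

```javascript
// Hypothetical helper: true when the given window is not the top-level window.
function isInsideIframe(win) {
    try {
        return win.self !== win.top;
    } catch (e) {
        // Defensive: if the comparison cannot be made, assume the page is framed.
        return true;
    }
}
```

In the page this would be called as `isInsideIframe(window)`.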

4. If the stream is published from Windows 10 or Windows 8 and hardware acceleration is enabled in the Google Chrome browser, bitrate problems are possible.

Symptoms: low video quality, muddy picture, bitrate shown in chrome://webrtc-internals is less than 100 kbps.

Solution: turn off hardware acceleration in the browser, or switch the browser or the server to use the VP8 codec.
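
The 100 kbps symptom can also be checked in code from two statistics samples taken some time apart. The sketch below assumes each sample carries `bytesSent` and `timestamp` (in milliseconds), as reported in the `outbound-rtp` entries of the standard `RTCPeerConnection.getStats()` result. The helper names are hypothetical, and how the application obtains the peer connection from the SDK is not covered here.

```javascript
// Hypothetical helper: average send bitrate in kbps between two samples.
// Each sample is an object {bytesSent, timestamp} with timestamp in milliseconds.
function sendBitrateKbps(prev, curr) {
    var elapsedMs = curr.timestamp - prev.timestamp;
    if (elapsedMs <= 0) {
        return 0;
    }
    var bits = (curr.bytesSent - prev.bytesSent) * 8;
    // Bits per millisecond is numerically equal to kilobits per second.
    return bits / elapsedMs;
}

// Hypothetical helper: true when the bitrate is below the 100 kbps mark from the symptoms above.
function looksBitrateStarved(prev, curr) {
    return sendBitrateKbps(prev, curr) < 100;
}
```

For example, 12500 bytes sent over 1000 ms gives 100 kbps, which is right at the threshold and is not flagged.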
