Example of iOS application managing media devices

This example publishes a WebRTC stream to Web Call Server and shows how to select the source camera and set the following parameters for published and played video:

- resolution (width, height)

- bitrate

- FPS (Frames Per Second) - for published video

- quality - for played video

Besides streams with both audio and video, the example can publish audio-only and video-only streams.

Audio and video can be muted when publishing starts (if the corresponding controls were set to ON before streaming started) or while the stream is being published.

Video streams can be played with or without video.

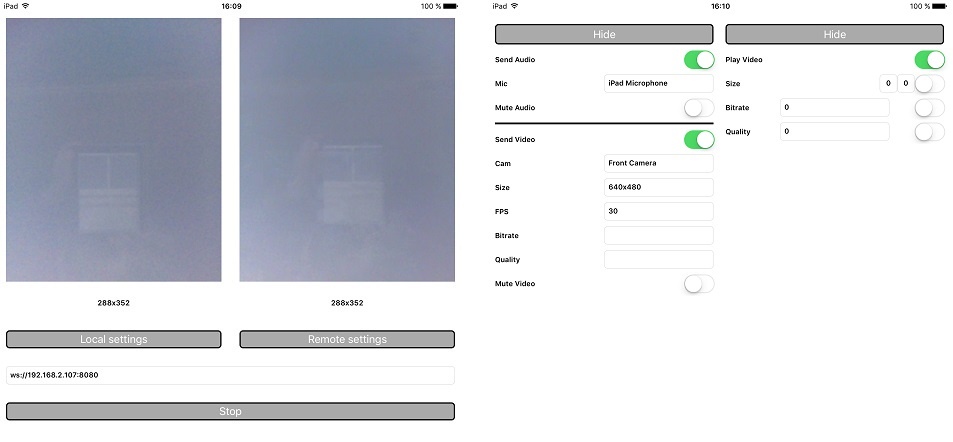

The screenshot shows the example while a stream is being published and played.

In the URL specified in the input field, 192.168.2.107 is the address of the WCS server.

Two videos are displayed:

- left - video from the camera

- right - the published video stream is played from the server

Tapping the 'Local settings' button opens the view with publishing settings, and tapping the 'Remote settings' button opens the view with playback settings.

Working with the example code

The code analyzed here is the MediaDevices example version with hash 79a318b, which can be downloaded with the corresponding build 2.2.2.

View classes

- class for the main view of the application: ViewController (header file ViewController.h; implementation file ViewController.m)

- class for view with publishing settings: WCSLocalVideoControlView (header file WCSLocalVideoControl.h; implementation file WCSLocalVideoControl.m)

- class for view with playback settings: WCSRemoteVideoControlView (header file WCSRemoteVideoControl.h; implementation file WCSRemoteVideoControl.m)

1. Import of API. ViewController.m, line 11

#import <FPWCSApi2/FPWCSApi2.h>

2. List available media devices.

The list of available media devices (cameras and microphones) is requested with the getMediaDevices method, which returns an FPWCSApi2MediaDeviceList object. WCSLocalVideoControl.m, line 12

localDevices = [FPWCSApi2 getMediaDevices];

The first microphone and the first camera in the list are selected by default:

- microphone (WCSLocalVideoControl.m, line 25)

if (localDevices.audio.count > 0) {

_micSelector.input.text = ((FPWCSApi2MediaDevice *)(localDevices.audio[0])).label;

}

- camera (WCSLocalVideoControl.m, line 37)

if (localDevices.video.count > 0) {

_camSelector.input.text = ((FPWCSApi2MediaDevice *)(localDevices.video[0])).label;

}

3. Constraints for published stream. WCSLocalVideoControl.m, line 210

- New FPWCSApi2MediaConstraints object is created

FPWCSApi2MediaConstraints *ret = [[FPWCSApi2MediaConstraints alloc] init];

- The audio constraint, which specifies whether the published stream has audio, is set to the value of the 'Send Audio' toggle switch

ret.audio = [_sendAudio.control isOn];

- If the published stream should have video (the 'Send Video' toggle switch is ON), video constraints are set: source camera ID, width, height, FPS and bitrate

if ([_sendVideo.control isOn]) {

FPWCSApi2VideoConstraints *video = [[FPWCSApi2VideoConstraints alloc] init];

for (FPWCSApi2MediaDevice *device in localDevices.video) {

if ([device.label isEqualToString:_camSelector.input.text]) {

video.deviceID = device.deviceID;

}

}

video.minWidth = video.maxWidth = [_videoResolution.width.text integerValue];

video.minHeight = video.maxHeight = [_videoResolution.height.text integerValue];

video.minFrameRate = video.maxFrameRate = [_fpsSelector.input.text integerValue];

video.bitrate = [_bitrate.input.text integerValue];

ret.video = video;

}
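The camera lookup loop above keeps scanning after a match and leaves the device ID unset when no label matches. As a minimal sketch of one way to handle that, the helper below returns on the first match and falls back to the first device in the list. The name `WCSDeviceIDForLabel` is hypothetical and not part of the SDK. To compile without the SDK, it reads `label` and `deviceID` through key-value coding and is exercised with `NSDictionary` stand-ins; that it behaves the same with `FPWCSApi2MediaDevice` objects is an assumption.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper, not part of the SDK: returns the deviceID of the first
// device whose label matches, or the first device's ID when nothing matches.
// Devices are read through key-value coding, so NSDictionary stand-ins work.
static id WCSDeviceIDForLabel(NSArray *devices, NSString *label) {
    for (id device in devices) {
        if ([[device valueForKey:@"label"] isEqualToString:label]) {
            return [device valueForKey:@"deviceID"]; // stop at the first match
        }
    }
    // No match: fall back to the first device, or nil if the list is empty.
    return [devices.firstObject valueForKey:@"deviceID"];
}
```

Whether falling back to the first camera is the right policy depends on the application; returning nil and skipping the video constraints would be an equally reasonable choice.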

4. Constraints for played stream. WCSRemoteVideoControl.m, line 128

- New FPWCSApi2MediaConstraints object is created

FPWCSApi2MediaConstraints *ret = [[FPWCSApi2MediaConstraints alloc] init];

- The audio constraint is set so that the played stream has audio

ret.audio = YES;

- If the played stream should have video (the 'Play Video' toggle switch is ON), video constraints are set: width, height, bitrate and quality

if ([_playVideo.control isOn]) {

FPWCSApi2VideoConstraints *video = [[FPWCSApi2VideoConstraints alloc] init];

video.minWidth = video.maxWidth = [_videoResolution.width.text integerValue];

video.minHeight = video.maxHeight = [_videoResolution.height.text integerValue];

video.bitrate = [_bitrate.input.text integerValue];

video.quality = [_quality.input.text integerValue];

ret.video = video;

}
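Both constraint builders convert text-field contents with `integerValue`, which returns 0 when the string does not start with a number and silently accepts input such as `640x480` as 640. The following sketch shows one possible stricter conversion using `NSScanner`. The helper name `WCSPositiveInteger` and the idea of a per-field fallback value are assumptions made for illustration; the example itself does not do this.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: parses a text-field value as a positive integer and
// returns `fallback` when the text is empty, non-numeric, or not positive.
static NSInteger WCSPositiveInteger(NSString *text, NSInteger fallback) {
    if (text.length == 0) {
        return fallback;
    }
    NSScanner *scanner = [NSScanner scannerWithString:text];
    NSInteger value = 0;
    // Require that the whole string (apart from skipped whitespace) is one integer.
    if (![scanner scanInteger:&value] || !scanner.isAtEnd) {
        return fallback;
    }
    return value > 0 ? value : fallback;
}
```

With such a helper, a constraint could be assigned as `video.minWidth = video.maxWidth = WCSPositiveInteger(_videoResolution.width.text, 640);`, where 640 is an arbitrary default chosen for this illustration.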

5. Mute/unmute audio and video.

The following methods are used to mute and unmute audio and video in the published stream:

- [_localStream muteAudio]; (line 238)

- [_localStream unmuteAudio]; (line 240)

- [_localStream muteVideo]; (line 246)

- [_localStream unmuteVideo]; (line 248)
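As a rough sketch of how two mute switches could drive these four calls, the helper below maps a pair of boolean states to the matching methods. `WCSMuting`, `WCSApplyMuteState` and `WCSFakeStream` are stand-ins invented for this illustration so that the code compiles without the SDK; only the four method names come from the example.

```objc
#import <Foundation/Foundation.h>

// Stand-in protocol (an assumption for this sketch) listing the four mute
// methods the example calls on the published stream.
@protocol WCSMuting <NSObject>
- (void)muteAudio;
- (void)unmuteAudio;
- (void)muteVideo;
- (void)unmuteVideo;
@end

// Hypothetical helper: brings the stream in line with the two mute switches.
static void WCSApplyMuteState(id<WCSMuting> stream, BOOL audioMuted, BOOL videoMuted) {
    if (audioMuted) { [stream muteAudio]; } else { [stream unmuteAudio]; }
    if (videoMuted) { [stream muteVideo]; } else { [stream unmuteVideo]; }
}

// Minimal fake used only to exercise the helper without the SDK.
@interface WCSFakeStream : NSObject <WCSMuting>
@property (nonatomic) BOOL audioMuted;
@property (nonatomic) BOOL videoMuted;
@end

@implementation WCSFakeStream
- (void)muteAudio { self.audioMuted = YES; }
- (void)unmuteAudio { self.audioMuted = NO; }
- (void)muteVideo { self.videoMuted = YES; }
- (void)unmuteVideo { self.videoMuted = NO; }
@end
```

Calling the same helper right after publishing starts and again whenever a switch changes would cover both cases described earlier: muting from the start and muting during publication.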

6. Connection to server.

The ViewController method start is called when the Start button is tapped. ViewController.m, line 224

[self start];

In the method,

- object with options for connection session is created (ViewController.m, line 38)

FPWCSApi2SessionOptions *options = [[FPWCSApi2SessionOptions alloc] init];

options.urlServer = _urlInput.text;

options.appKey = @"defaultApp";

The options include the URL of the WCS server and the appKey of the internal server-side application.

- new session is created with method createSession, which returns FPWCSApi2Session object (ViewController.m, line 42)

_session = [FPWCSApi2 createSession:options error:&error];

- callback functions for processing session statuses are added (ViewController.m, line 61)

[_session on:kFPWCSSessionStatusEstablished callback:^(FPWCSApi2Session *session){

[self startStreaming];

}];

[_session on:kFPWCSSessionStatusDisconnected callback:^(FPWCSApi2Session *session){

[self onStopped];

}];

[_session on:kFPWCSSessionStatusFailed callback:^(FPWCSApi2Session *session){

[self onStopped];

}];

If the connection is established successfully, the ViewController method startStreaming is called to publish a new stream.

On disconnection or connection failure, the ViewController method onStopped is called to update the interface controls accordingly.

- FPWCSApi2Session method connect is called to establish connection to server (ViewController.m, line 72)

[_session connect];
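The server URL is passed to the session options exactly as typed in the input field. A small pre-flight check, sketched below, could reject obviously malformed input before a session is created. The helper name `WCSLooksLikeServerURL` is hypothetical, and the sketch assumes that the server is addressed with a WebSocket URL (`ws://` or `wss://`); the port used in the usage note is likewise only an assumption.

```objc
#import <Foundation/Foundation.h>

// Hypothetical pre-flight check for the text assigned to options.urlServer.
// Assumption: the server is reached over a WebSocket URL (ws:// or wss://).
static BOOL WCSLooksLikeServerURL(NSString *text) {
    if (text.length == 0) {
        return NO;
    }
    NSURLComponents *components = [NSURLComponents componentsWithString:text];
    NSString *scheme = components.scheme.lowercaseString;
    BOOL schemeOK = [scheme isEqualToString:@"ws"] || [scheme isEqualToString:@"wss"];
    // A usable URL needs both an accepted scheme and a non-empty host.
    return schemeOK && components.host.length > 0;
}
```

Under these assumptions, `WCSLooksLikeServerURL(@"wss://192.168.2.107:8443")` is accepted, while a bare address such as `192.168.2.107` is rejected because it has no scheme.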

7. Stream publishing.

When connection to the server is established,

- object with stream publish options is created (ViewController.m, line 79)

FPWCSApi2StreamOptions *options = [[FPWCSApi2StreamOptions alloc] init];

options.name = [self getStreamName];

options.display = _videoView.local;

options.constraints = [_localControl toMediaConstraints];

The options include stream name, view for displaying video and constraints for audio and video.

WCSLocalVideoControlView method toMediaConstraints is used to get the constraints.

- new stream is created with FPWCSApi2Session method createStream, which returns FPWCSApi2Stream object (ViewController.m, line 84)

_localStream = [_session createStream:options error:&error];

- callback functions for processing stream statuses are added (ViewController.m, line 109)

[_localStream on:kFPWCSStreamStatusPublishing callback:^(FPWCSApi2Stream *stream){

[self startPlaying];

}];

[_localStream on:kFPWCSStreamStatusUnpublished callback:^(FPWCSApi2Stream *stream){

[self onStopped];

}];

[_localStream on:kFPWCSStreamStatusFailed callback:^(FPWCSApi2Stream *stream){

[self onStopped];

}];

If the stream is published successfully, the ViewController method startPlaying is called to play the stream.

On failure, or when the stream is unpublished, the ViewController method onStopped is called to update the interface controls accordingly.

- FPWCSApi2Stream method publish is called to publish the stream (ViewController.m, line 120)

[_localStream publish:&error];

8. Stream playback.

When stream is published,

- object with stream playback options is created (ViewController.m, line 139)

FPWCSApi2StreamOptions *options = [[FPWCSApi2StreamOptions alloc] init];

options.name = [_localStream getName];

options.display = _videoView.remote;

options.constraints = [_remoteControl toMediaConstraints];

The options include stream name, view for displaying video and constraints for audio and video.

WCSRemoteVideoControlView method toMediaConstraints is used to get the constraints.

- new stream is created with FPWCSApi2Session method createStream, which returns FPWCSApi2Stream object (ViewController.m, line 144)

_remoteStream = [_session createStream:options error:&error];

- callback functions for processing stream statuses are added (ViewController.m, line 162)

[_remoteStream on:kFPWCSStreamStatusPlaying callback:^(FPWCSApi2Stream *stream){

[self onStarted];

}];

[_remoteStream on:kFPWCSStreamStatusStopped callback:^(FPWCSApi2Stream *rStream){

[_localStream stop:nil];

}];

[_remoteStream on:kFPWCSStreamStatusFailed callback:^(FPWCSApi2Stream *rStream){

if (_localStream && [_localStream getStatus] == kFPWCSStreamStatusPublishing) {

[_localStream stop:nil];

}

}];

If playback starts successfully, the ViewController method onStarted is called to update the interface controls accordingly.

On failure, or when playback is stopped, the FPWCSApi2Stream method stop is called on the published stream to stop its publication.

[_localStream stop:nil];

- FPWCSApi2Stream method play is called to play the stream (ViewController.m, line 174)

[_remoteStream play:&error];
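The callbacks registered on the session, the published stream and the played stream form a chain: each status triggers the next step or tears the chain down. The sketch below only summarizes that chain as a lookup table. The function `WCSReactionFor` and the informal `source:status` keys are made up for this illustration and do not exist in the example or the SDK; the values name the methods described in the sections above.

```objc
#import <Foundation/Foundation.h>

// Illustrative summary only: maps an informal "source:status" label to the
// reaction described in the walkthrough. Returns nil for unknown pairs.
static NSString *WCSReactionFor(NSString *source, NSString *status) {
    NSDictionary<NSString *, NSString *> *table = @{
        // Session statuses
        @"session:Established":  @"startStreaming",
        @"session:Disconnected": @"onStopped",
        @"session:Failed":       @"onStopped",
        // Published (local) stream statuses
        @"local:Publishing":     @"startPlaying",
        @"local:Unpublished":    @"onStopped",
        @"local:Failed":         @"onStopped",
        // Played (remote) stream statuses
        @"remote:Playing":       @"onStarted",
        @"remote:Stopped":       @"stop local stream",
        @"remote:Failed":        @"stop local stream if still publishing",
    };
    return table[[NSString stringWithFormat:@"%@:%@", source, status]];
}
```

Read top to bottom, the table shows that stopping or failing playback does not reset the interface directly: it stops the published stream, whose own Unpublished or Failed status then leads to onStopped.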

9. Disconnection. ViewController.m, line 36

The FPWCSApi2Session method disconnect is called to close the connection to the server.

[_session disconnect];