From an iOS mobile app via WebRTC

Overview

WCS provides an SDK for developing client applications for the iOS platform.

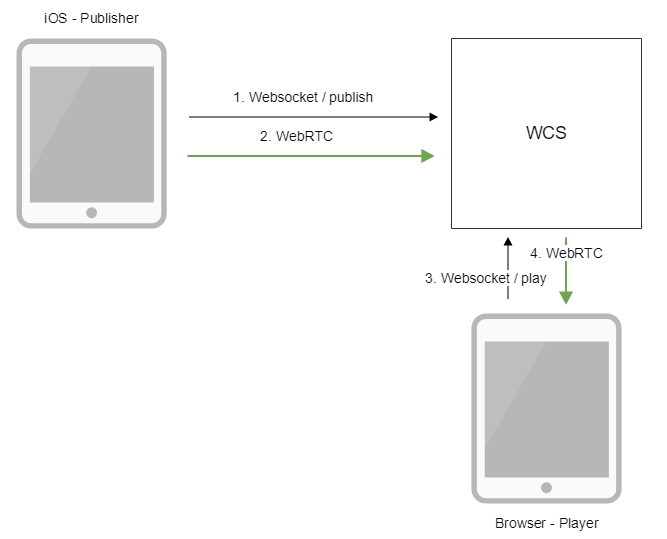

Operation flowchart

- The iOS device connects to the server via the WebSocket protocol and sends the `publishStream` command.
- The iOS device captures the microphone and the camera and sends the WebRTC stream to the server.
- The browser establishes a connection via WebSocket and sends the `playStream` command.
- The browser receives the WebRTC stream and plays that stream on the page.

Quick manual on testing

- For the test we use:

- the demo server at

wcs5-us.flashphoner.com; - the mobile application for iOS;

-

the Two Way Streaming web application to display the captured stream

-

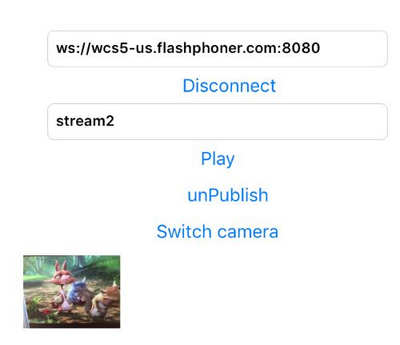

Start the application on iPhone. Enter the URL of the WCS server and the name of the stream. Publish the stream by clicking

Publish:

-

Open the Two Way Streaming web application. In the field just below the player window enter the name of the stream broadcast from iPhone, and click the

Playbutton. The browser starts playing the stream:

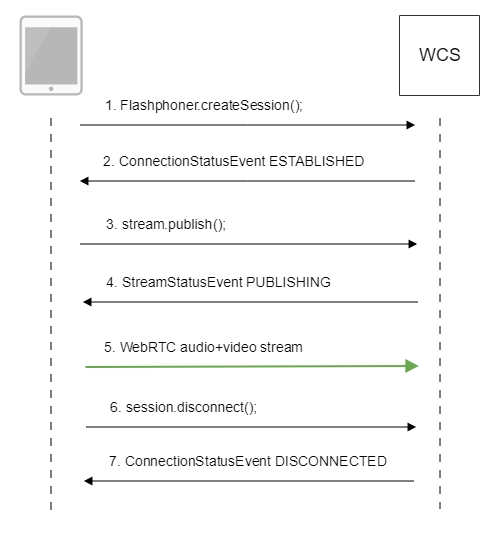

Call flow

Below is the call flow when using the Streamer example:

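- Establishing a connection to the server

  `FPWCSApi2.createSession()`

  ```objc
  FPWCSApi2SessionOptions *options = [[FPWCSApi2SessionOptions alloc] init];
  // The URL typed into the app carries both the server address and the stream name
  NSURL *url = [[NSURL alloc] initWithString:_connectUrl.text];
  // Server address as scheme://host:port (if the URL has no explicit port,
  // url.port is nil and the resulting string ends in ":(null)")
  options.urlServer = [NSString stringWithFormat:@"%@://%@:%@", url.scheme, url.host, url.port];
  // Stream name = URL path without its extension and without "/" characters
  streamName = [url.path.stringByDeletingPathExtension stringByReplacingOccurrencesOfString:@"/" withString:@""];
  // Name of the WCS application on the server that handles this session
  options.appKey = @"defaultApp";
  NSError *error;
  session = [FPWCSApi2 createSession:options error:&error];
  ```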
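Both the server address and the stream name come from the single URL typed into the app. The following Foundation-only sketch isolates that parsing so it can be checked without the WCS SDK. The helper names `WCSServerURLFromURL` and `WCSStreamNameFromURL` are hypothetical, and, unlike the example code, the server helper drops the `:port` part when the URL has no explicit port instead of emitting a literal `(null)`; whether a port-less URL then reaches the right WCS port is not addressed here.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: builds "scheme://host:port" from the URL typed into the app,
// mirroring the stringWithFormat: call in the Streamer example.
// If the URL has no explicit port, the ":port" part is omitted rather than
// producing the string "(null)".
static NSString *WCSServerURLFromURL(NSURL *url) {
    if ([url port] == nil) {
        return [NSString stringWithFormat:@"%@://%@", [url scheme], [url host]];
    }
    return [NSString stringWithFormat:@"%@://%@:%@", [url scheme], [url host], [url port]];
}

// Hypothetical helper: derives the stream name from the URL path the same way as
// the example: drop any path extension, then remove every "/" character.
static NSString *WCSStreamNameFromURL(NSURL *url) {
    NSString *pathWithoutExtension = [[url path] stringByDeletingPathExtension];
    return [pathWithoutExtension stringByReplacingOccurrencesOfString:@"/" withString:@""];
}
```

For a URL such as `wss://wcs5-us.flashphoner.com:8443/stream1`, this yields the server URL `wss://wcs5-us.flashphoner.com:8443` and the stream name `stream1`. Because every `/` is removed, a nested path such as `/live/stream1` would produce `livestream1`.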

- Receiving from the server an event confirming successful connection

  `FPWCSApi2Session.on:kFPWCSSessionStatusEstablished` callback
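A handler for this event might be registered as in the following minimal sketch. It assumes that `FPWCSApi2Session` provides an `on:callback:` method taking a status and a block that receives the session, plus a `connect` method that opens the connection, as in the WCS iOS Streamer example; `onConnected:` stands for the view controller's own handler and is hypothetical. The fragment compiles only inside that view controller, against the FPWCSApi2 SDK.

```objc
// Sketch (assumed API): run a handler once the server confirms the connection.
// Weak reference so the block does not keep the view controller alive.
__weak __typeof__(self) weakSelf = self;
[session on:kFPWCSSessionStatusEstablished callback:^(FPWCSApi2Session *rSession) {
    NSLog(@"Connected to %@", [rSession getServerUrl]);
    // The session is ready: the app can now create and publish its stream.
    // onConnected: is a hypothetical view-controller method.
    [weakSelf onConnected:rSession];
}];

// Open the WebSocket connection; the callback above fires when it is established
[session connect];
```

The weak `self` reference avoids a retain cycle in case the session keeps the block for later use.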

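- Publishing the stream

  `FPWCSApi2Session.createStream()`

  ```objc
  // Use the first (here, the only) session created in the previous step
  FPWCSApi2Session *session = [FPWCSApi2 getSessions][0];
  FPWCSApi2StreamOptions *options = [[FPWCSApi2StreamOptions alloc] init];
  // Name under which the stream is published on the server
  options.name = streamName;
  // View that displays the local camera preview
  options.display = _videoView.local;
  // On iPad, request audio plus 640x480 video at 15 fps;
  // on other devices no constraints are set here
  if (UI_USER_INTERFACE_IDIOM() == UIUserInterfaceIdiomPad) {
      options.constraints = [[FPWCSApi2MediaConstraints alloc] initWithAudio:YES videoWidth:640 videoHeight:480 videoFps:15];
  }
  NSError *error;
  publishStream = [session createStream:options error:&error];
  ```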
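Creating the stream object does not by itself start sending media. A minimal sketch of the next step, assuming `FPWCSApi2Stream` exposes a `publish:` method that returns `BOOL` and reports failures through an `NSError` out-parameter (as in the WCS iOS examples), and continuing with the `publishStream`, `error` and `streamName` variables from the code above:

```objc
// Sketch (assumed API): make sure the stream object exists, then start publishing.
if (publishStream == nil) {
    // createStream:error: did not return a stream; report why
    NSLog(@"Failed to create stream: %@", error.localizedDescription);
} else if (![publishStream publish:&error]) {
    // publish: refused to start; error describes the reason
    NSLog(@"Failed to publish stream %@: %@", streamName, error.localizedDescription);
}
```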

- Receiving from the server an event confirming successful publishing of the stream

  `FPWCSApi2Stream.on:kFPWCSStreamStatusPublishing` callback
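A handler for the publishing status could be attached to the stream right after it is created and before publication starts. This sketch assumes `FPWCSApi2Stream` offers the same `on:callback:` pattern as the session; `onPublishing:` is a hypothetical view-controller method.

```objc
// Sketch (assumed API): react when the server reports the stream as publishing.
__weak __typeof__(self) weakSelf = self;
[publishStream on:kFPWCSStreamStatusPublishing callback:^(FPWCSApi2Stream *rStream) {
    // The server has accepted the stream; update the UI (e.g. show a STOP button).
    // onPublishing: is a hypothetical view-controller method.
    [weakSelf onPublishing:rStream];
}];
```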

- Sending the audio and video stream via WebRTC

- Stopping publishing of the stream

  `FPWCSApi2Session.disconnect()`

  ```objc
  if ([button.titleLabel.text isEqualToString:@"STOP"]) {
      // Button shows STOP: close the current session, if any, which stops publishing
      if ([FPWCSApi2 getSessions].count) {
          FPWCSApi2Session *session = [FPWCSApi2 getSessions][0];
          NSLog(@"Disconnect session with server %@", [session getServerUrl]);
          [session disconnect];
      } else {
          // No session to close; run the disconnect handler directly
          NSLog(@"Nothing to disconnect");
          [self onDisconnected];
      }
  } else {
      // Otherwise start a new connection
      //todo check url is not empty
      [self changeViewState:_connectUrl enabled:NO];
      [self connect];
  }
  ```

- Receiving from the server an event confirming that the session has been disconnected and the stream unpublished

  `FPWCSApi2Session.on:kFPWCSSessionStatusDisconnected` callback
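The disconnect handler can be registered alongside the other session handlers, right after the session is created. A minimal sketch, assuming the `on:callback:` method of `FPWCSApi2Session`; `onDisconnected` is the view-controller method already called in the stop-button code, and `getServerUrl` is the session method used there.

```objc
// Sketch (assumed API): reset the app once the session is closed.
__weak __typeof__(self) weakSelf = self;
[session on:kFPWCSSessionStatusDisconnected callback:^(FPWCSApi2Session *rSession) {
    NSLog(@"Disconnected from %@", [rSession getServerUrl]);
    // Return the UI to its initial state
    [weakSelf onDisconnected];
}];
```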