In an Android mobile application via WebRTC

Overview

WCS provides an SDK for developing client applications for the Android platform.

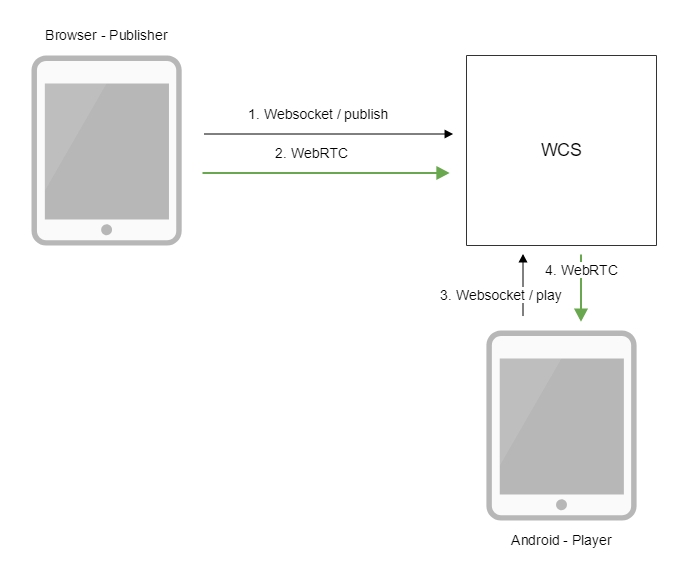

Operation flowchart

- The browser connects to the server via the WebSocket protocol and sends the `publishStream` command.
- The browser captures the microphone and the camera and sends the WebRTC stream to the server.
- The Android device connects to the server via the WebSocket protocol and sends the `playStream` command.
- The Android device receives the WebRTC stream from the server and plays it in the application.

Quick manual on testing

For the test we use:

- the demo server at `demo.flashphoner.com`;
- the Two Way Streaming web application to publish the stream;
- the Player mobile application (Google Play) to play the stream.

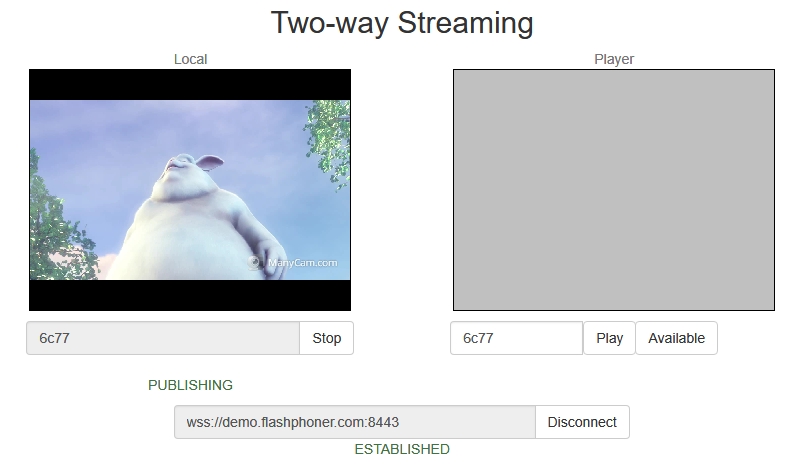

Open the Two Way Streaming web application. Click `Connect`, then `Publish`. Copy the identifier of the stream:

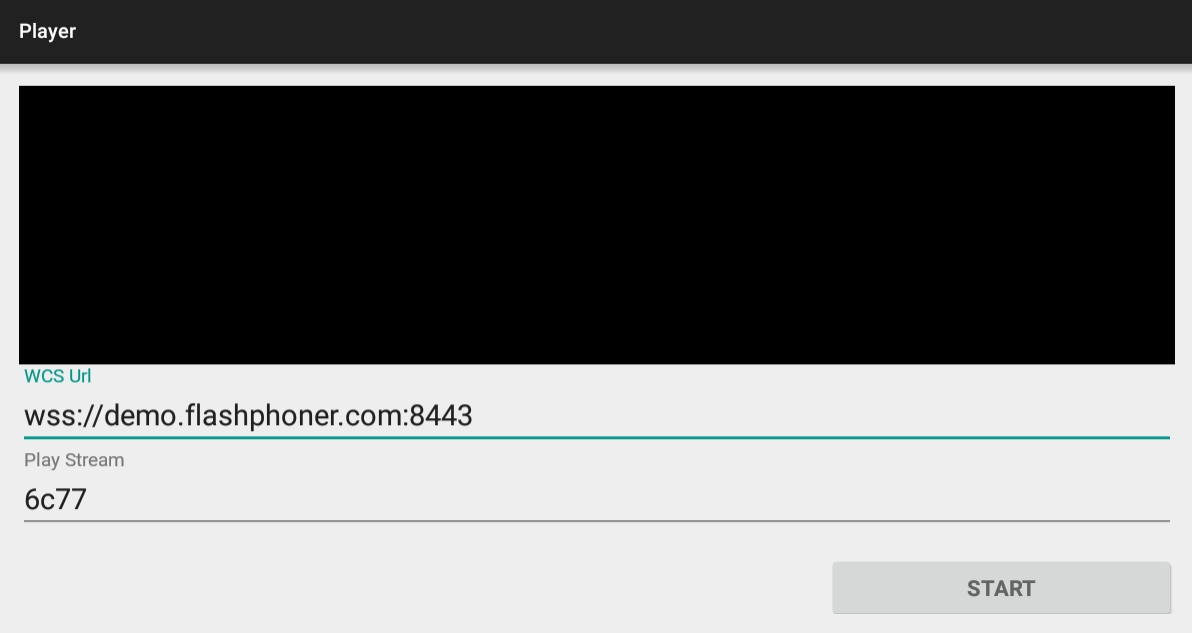

Install the Player mobile app from Google Play on the Android device. Start the app, enter the address of the WCS server in the `WCS url` field as `wss://demo.flashphoner.com:8443`, and enter the identifier of the video stream in the `Play Stream` field:

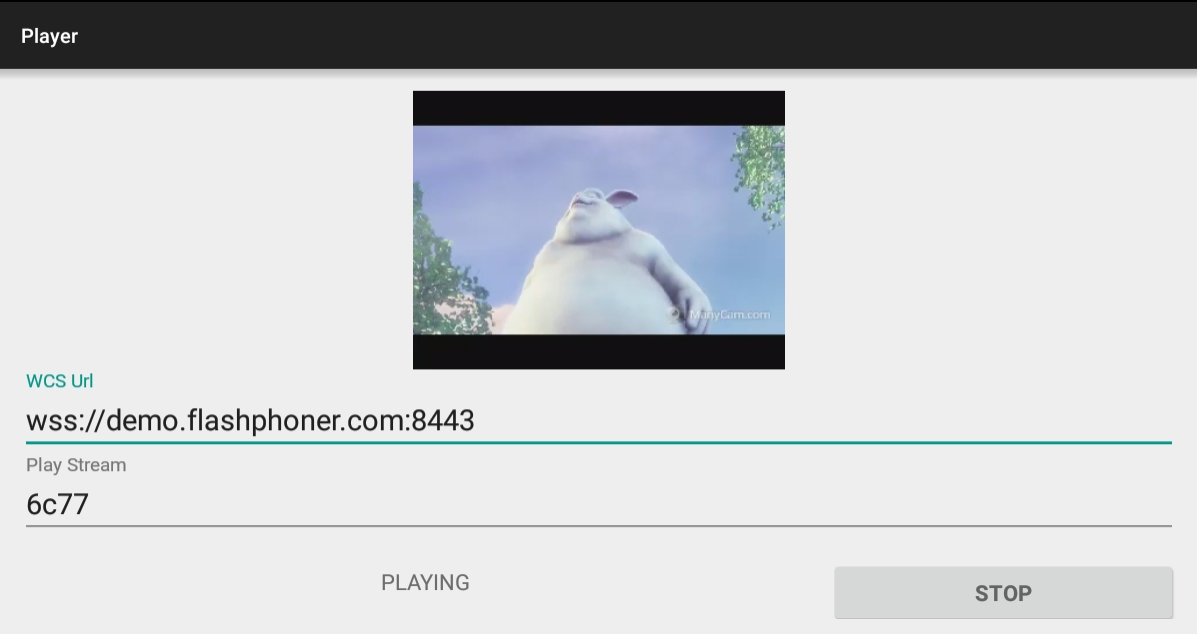

Click `Start`. The video stream starts playing:

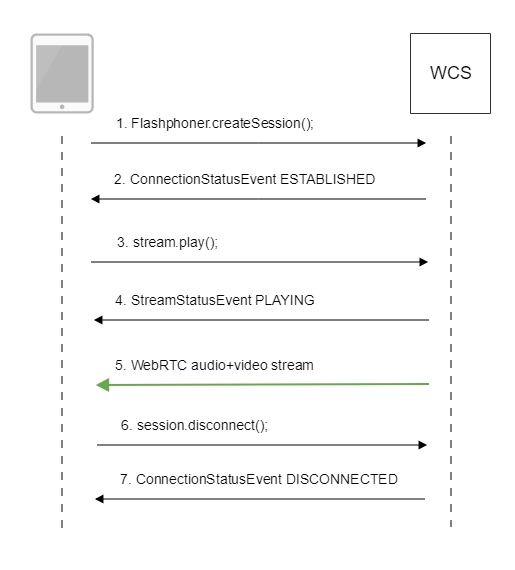
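
The server address entered in the `WCS url` field during the test has the form `wss://host:port`. As an illustration, the sketch below checks that shape with the standard `java.net.URI` class before the value is handed to the SDK. The class `WcsUrlCheck` and the method `isValidWcsUrl` are hypothetical helpers, not part of the WCS Android SDK. The rule it applies (scheme `ws` or `wss`, a host, and an explicit port) is an assumption based on the example address above.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Hypothetical helper; it is not part of the WCS Android SDK. */
final class WcsUrlCheck {

    /**
     * Returns true if the text parses as a WebSocket URL with a host and an
     * explicit port, for example wss://demo.flashphoner.com:8443.
     * The accepted shape is an assumption made for this sketch.
     */
    static boolean isValidWcsUrl(String text) {
        if (text == null) {
            return false;
        }
        try {
            URI uri = new URI(text.trim());
            String scheme = uri.getScheme();
            boolean webSocketScheme =
                    "wss".equalsIgnoreCase(scheme) || "ws".equalsIgnoreCase(scheme);
            // getHost() is null and getPort() is -1 when the part is missing.
            return webSocketScheme && uri.getHost() != null && uri.getPort() != -1;
        } catch (URISyntaxException e) {
            // Text that is not a syntactically valid URI is rejected.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(isValidWcsUrl("wss://demo.flashphoner.com:8443")); // true
        System.out.println(isValidWcsUrl("demo.flashphoner.com"));            // false
    }
}
```

In an application, a check of this kind could run when the user taps `Start`, so that a malformed address is reported in the UI instead of producing a failed connection attempt.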

Call flow

Below is the call flow when using the Player example to play the stream.

- Establishing a connection to the server

  `Flashphoner.createSession()`

  ```java
  /**
   * The options for connection session are set.
   * WCS server URL is passed when SessionOptions object is created.
   * SurfaceViewRenderer to be used to display the video stream is set with method SessionOptions.setRemoteRenderer().
   */
  SessionOptions sessionOptions = new SessionOptions(mWcsUrlView.getText().toString());
  sessionOptions.setRemoteRenderer(remoteRender);

  /**
   * Session for connection to WCS server is created with method createSession().
   */
  session = Flashphoner.createSession(sessionOptions);
  ```

- Receiving from the server an event that confirms successful connection

  `Session.onConnected()`

  ```java
  @Override
  public void onConnected(final Connection connection) {
      runOnUiThread(new Runnable() {
          @Override
          public void run() {
              mStartButton.setText(R.string.action_stop);
              mStartButton.setTag(R.string.action_stop);
              mStartButton.setEnabled(true);
              mStatusView.setText(connection.getStatus());

              /**
               * The options for the stream to play are set.
               * The stream name is passed when StreamOptions object is created.
               */
              StreamOptions streamOptions = new StreamOptions(mPlayStreamView.getText().toString());

              /**
               * Stream is created with method Session.createStream().
               */
              playStream = session.createStream(streamOptions);
              ...
          }
          ...
      });
      ...
  }
  ```

- Playing the stream

  `Stream.play()`

- Receiving from the server an event confirming successful playing of the stream

  `StreamStatus.PLAYING`

  ```java
  /**
   * Callback function for stream status change is added to display the status.
   */
  playStream.on(new StreamStatusEvent() {
      @Override
      public void onStreamStatus(final Stream stream, final StreamStatus streamStatus) {
          runOnUiThread(new Runnable() {
              @Override
              public void run() {
                  if (StreamStatus.NOT_ENOUGH_BANDWIDTH.equals(streamStatus)) {
                      // Checked before the generic "not PLAYING" branch;
                      // otherwise this warning could never be reached.
                      Log.w(TAG, "Not enough bandwidth stream " + stream.getName()
                              + ", consider using lower video resolution or bitrate. "
                              + "Bandwidth " + (Math.round(stream.getNetworkBandwidth() / 1000)) + " "
                              + "bitrate " + (Math.round(stream.getRemoteBitrate() / 1000)));
                  } else if (!StreamStatus.PLAYING.equals(streamStatus)) {
                      // Any other status than PLAYING is reported as a playback failure.
                      Log.e(TAG, "Can not play stream " + stream.getName() + " " + streamStatus);
                      mStatusView.setText(streamStatus.toString());
                  } else {
                      mStatusView.setText(streamStatus.toString());
                  }
              }
          });
      }
  });
  ```

- Receiving the audio-video stream via WebRTC

- Stopping the playback of the stream

  `Session.disconnect()`

- Receiving from the server an event confirming that playback of the stream has stopped

  `Session.onDisconnection()`

  ```java
  @Override
  public void onDisconnection(final Connection connection) {
      runOnUiThread(new Runnable() {
          @Override
          public void run() {
              mStartButton.setText(R.string.action_start);
              mStartButton.setTag(R.string.action_start);
              mStartButton.setEnabled(true);
              mStatusView.setText(connection.getStatus());
          }
      });
  }
  ```
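
The call flow above can be read as a small state machine: connect, wait for the connection event, request playback, wait for `PLAYING`, then disconnect. The sketch below models only that ordering in plain Java, so it runs without the SDK. The `PlayerFlow` class, its `State` enum, and its method names are hypothetical. They mirror the SDK calls and callbacks named in the list but do not invoke them, and the intermediate states `CONNECTING` and `PLAY_REQUESTED` are assumptions introduced for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of the Player call flow; it does not call the WCS SDK. */
final class PlayerFlow {

    enum State { DISCONNECTED, CONNECTING, CONNECTED, PLAY_REQUESTED, PLAYING }

    private State state = State.DISCONNECTED;
    private final List<State> history = new ArrayList<>();

    PlayerFlow() {
        history.add(state);
    }

    State state() { return state; }

    List<State> history() { return history; }

    /** The app establishes a connection (Flashphoner.createSession() in the example). */
    void connect() { move(State.DISCONNECTED, State.CONNECTING); }

    /** The server confirms the connection (Session.onConnected() in the example). */
    void onConnected() { move(State.CONNECTING, State.CONNECTED); }

    /** The app requests playback (Stream.play() in the example). */
    void play() { move(State.CONNECTED, State.PLAY_REQUESTED); }

    /** The server reports StreamStatus.PLAYING. */
    void onPlaying() { move(State.PLAY_REQUESTED, State.PLAYING); }

    /** Disconnecting is allowed from any state and always ends in DISCONNECTED. */
    void disconnect() {
        state = State.DISCONNECTED;
        history.add(state);
    }

    /** Performs a transition only if the flow is in the expected state. */
    private void move(State expected, State next) {
        if (state != expected) {
            throw new IllegalStateException("Expected " + expected + " but was " + state);
        }
        state = next;
        history.add(state);
    }

    public static void main(String[] args) {
        PlayerFlow flow = new PlayerFlow();
        flow.connect();
        flow.onConnected();
        flow.play();
        flow.onPlaying();
        flow.disconnect();
        // Prints [DISCONNECTED, CONNECTING, CONNECTED, PLAY_REQUESTED, PLAYING, DISCONNECTED]
        System.out.println(flow.history());
    }
}
```

Rejecting out-of-order calls, such as `play()` before `onConnected()`, is a design choice of this sketch. It makes the dependency between the steps explicit: the stream is created and played only after the connection event arrives.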