From a web camera in a browser via WebRTC¶

Overview¶

Supported platforms and browsers¶

| | Chrome | Firefox | Safari | Edge |
|---|---|---|---|---|
| Windows | ✅ | ✅ | ❌ | ✅ |
| Mac OS | ✅ | ✅ | ✅ | ✅ |
| Android | ✅ | ✅ | ❌ | ✅ |
| iOS | ✅ | ✅ | ✅ | ✅ |

Supported codecs¶

- Video: H.264, VP8

- Audio: Opus, PCMA, PCMU, G722, G729

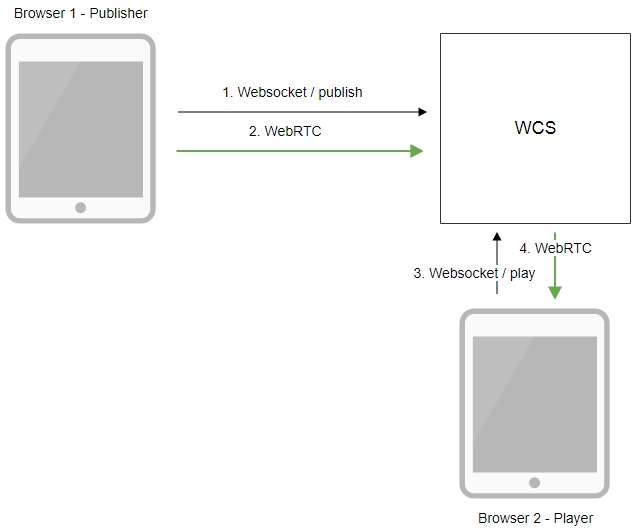

Operation flowchart¶

- The browser connects to the server via the Websocket protocol and sends the publishStream command.
- The browser captures the microphone and the camera and sends a WebRTC stream to the server.
- The second browser also establishes a connection via Websocket and sends the playStream command.
- The second browser receives the WebRTC stream and plays that stream on the page.

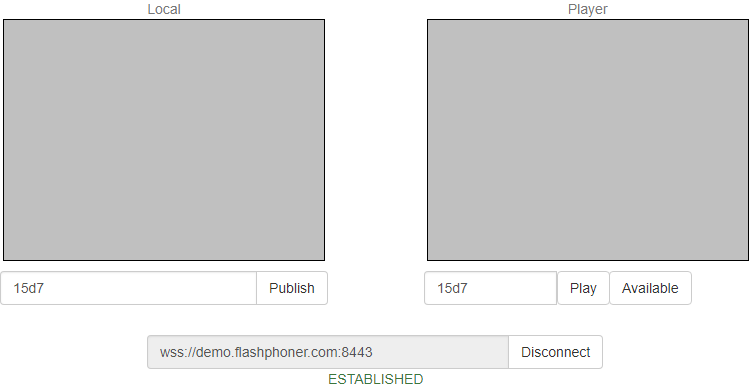

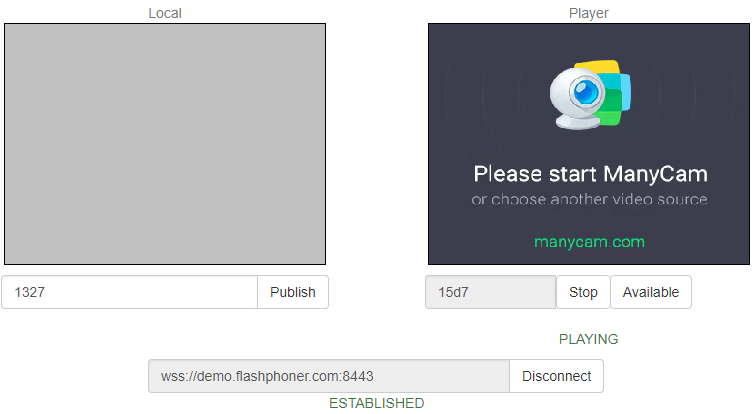

Quick manual on testing¶

-

For this test we use the demo server at

demo.flashphoner.comand the Two Way Streaming web applicationhttps://demo.flashphoner.com/client2/examples/demo/streaming/two_way_streaming/two_way_streaming.html -

Establish a connection with the server by clicking the

Connectbutton

-

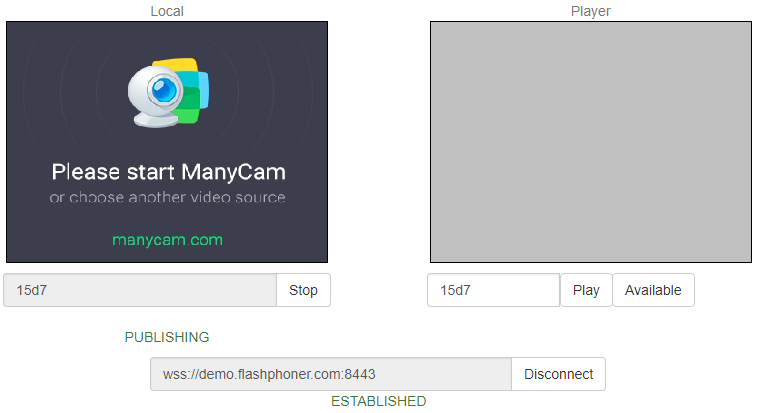

Click

Publish. The browser captures the camera and sends the stream to the server

-

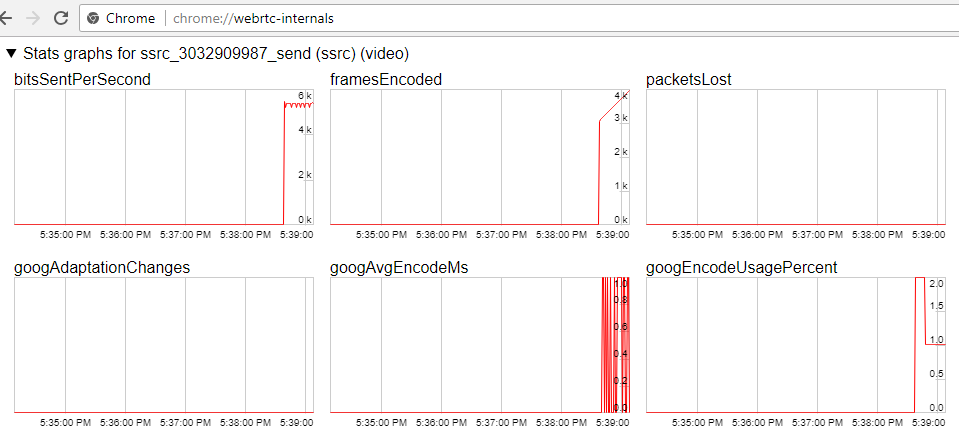

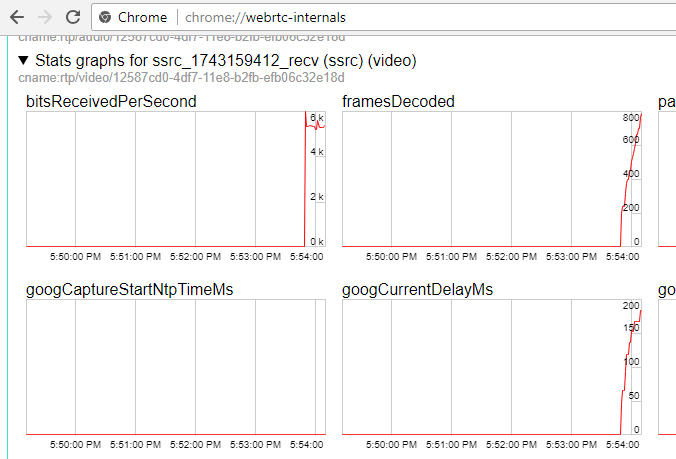

Make sure the stream us sent to the server and the system operates normally by opening

chrome://webrtc-internals

-

Open the Two Way Streaming app in a new window, click

Connectand specify the stream ID, then clickPlay

-

Playback diagrams in

chrome://webrtc-internals

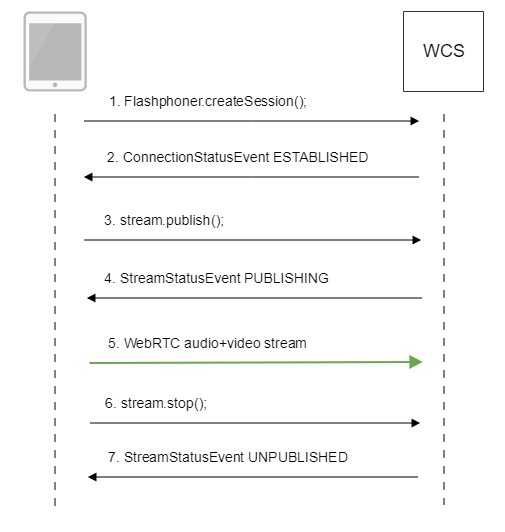

Call flow¶

Below is the call flow based on the Two Way Streaming example

- Establishing a connection to the server: Flashphoner.createSession() code

Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function (session) {
    setStatus("#connectStatus", session.status());
    onConnected(session);
}).on(SESSION_STATUS.DISCONNECTED, function () {
    setStatus("#connectStatus", SESSION_STATUS.DISCONNECTED);
    onDisconnected();
}).on(SESSION_STATUS.FAILED, function () {
    setStatus("#connectStatus", SESSION_STATUS.FAILED);
    onDisconnected();
});

- Receiving the successful connection status from the server: SESSION_STATUS.ESTABLISHED code

Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function (session) {
    setStatus("#connectStatus", session.status());
    onConnected(session);
}).on(SESSION_STATUS.DISCONNECTED, function () {
    setStatus("#connectStatus", SESSION_STATUS.DISCONNECTED);
    onDisconnected();
}).on(SESSION_STATUS.FAILED, function () {
    setStatus("#connectStatus", SESSION_STATUS.FAILED);
    onDisconnected();
});

- Publishing the stream: Stream.publish() code

session.createStream({
    name: streamName,
    display: localVideo,
    cacheLocalResources: true,
    receiveVideo: false,
    receiveAudio: false
}).on(STREAM_STATUS.PUBLISHING, function (stream) {
    setStatus("#publishStatus", STREAM_STATUS.PUBLISHING);
    onPublishing(stream);
}).on(STREAM_STATUS.UNPUBLISHED, function () {
    setStatus("#publishStatus", STREAM_STATUS.UNPUBLISHED);
    onUnpublished();
}).on(STREAM_STATUS.FAILED, function () {
    setStatus("#publishStatus", STREAM_STATUS.FAILED);
    onUnpublished();
}).publish();

- Receiving the successful publishing status from the server: STREAM_STATUS.PUBLISHING code

- Sending the audio-video stream via WebRTC

- Stopping stream publishing: Stream.stop() code

- Receiving an event from the server confirming successful unpublishing: STREAM_STATUS.UNPUBLISHED code

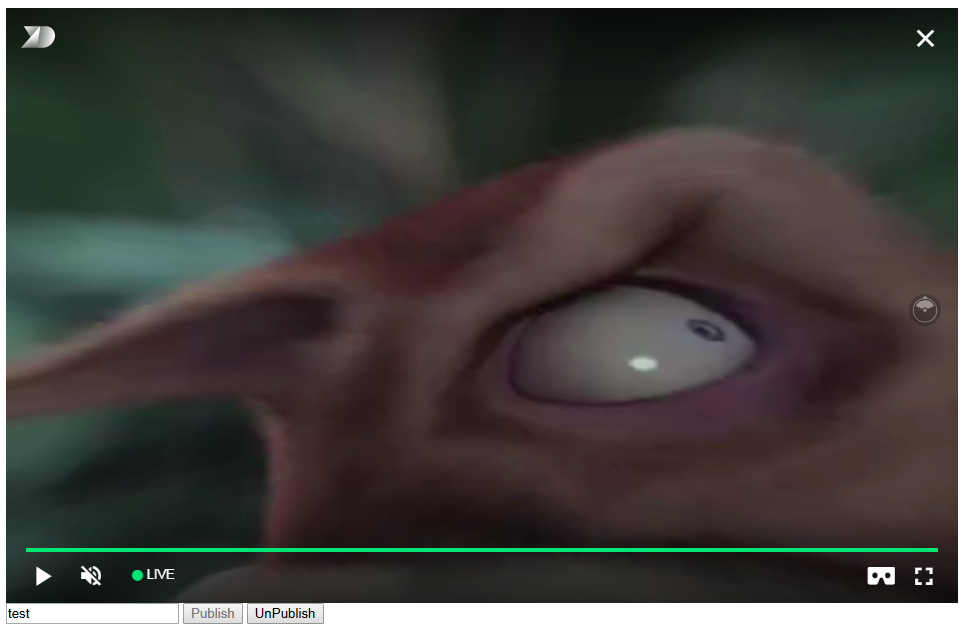

Stream publishing with local video playback in VR Player¶

When a stream is captured from a web camera, the local video can be played in a browser-based VR player, for example Delight Player. This way the stream can be played on virtual and mixed reality devices, provided that a browser supported by the player runs on the device. JavaScript and HTML5 features are used to integrate a custom player.

Testing¶

- For the test we use:
    - WCS server
    - a test page with Delight VR player to play the stream while publishing
- Set the stream name test and press Publish. The published stream is played in Delight player

Player page example code¶

- Declaration of the video element to play the stream, the stream name input field and the Publish/Unpublish buttons

<div style="width: 50%;">
    <dl8-live-video id="remoteVideo" format="STEREO_TERPON" muted="true">
        <source>
    </dl8-live-video>
</div>
<input class="form-control" type="text" id="streamName" placeholder="Stream Name">
<button id="publishBtn" type="button" class="btn btn-default" disabled>Publish</button>
<button id="unpublishBtn" type="button" class="btn btn-default" disabled>UnPublish</button>

- Player readiness event handling

- Creating mock elements to play a stream

- Establishing a connection to the server and creating the stream

var video = dl8video.contentElement;
Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function (session) {
    // Use the session passed to the callback: Flashphoner.getSessions()[0]
    // would return an older session after repeated publishing
    session.createStream({
        name: $('#streamName').val(),
        display: mockLocalDisplay.get(0)
    }).on(STREAM_STATUS.PUBLISHING, function (stream) {
        ...
    }).publish();
});

- Publishing the stream, starting playback in the VR player and handling the Unpublish button

session.createStream({
    ...
}).on(STREAM_STATUS.PUBLISHING, function (stream) {
    // Hand the captured MediaStream over from the hidden mock video to the VR player
    var srcObject = mockLocalVideo.get(0).srcObject;
    video.srcObject = srcObject;
    dl8video.start();
    mockLocalVideo.get(0).pause();
    mockLocalVideo.get(0).srcObject = null;
    // off('click') keeps click handlers from piling up on repeated publishing
    $('#unpublishBtn').prop('disabled', false).off('click').click(function () {
        stream.stop();
        $('#publishBtn').prop('disabled', false);
        $('#unpublishBtn').prop('disabled', true);
        dl8video.exit();
    });
}).publish();

Full source code of the sample VR player page

<!DOCTYPE html>

<html>

<head>

<title>WebRTC Delight</title>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<script type="text/javascript" src="../../../../flashphoner.js"></script>

<script type="text/javascript" src="../../dependencies/jquery/jquery-1.12.0.js"></script>

<script type="text/javascript" src="../../dependencies/js/utils.js"></script>

<script src="dl8-66b250447635476d123a44a391c80b09887e831e.js" async></script>

<meta name="dl8-custom-format" content='{"name": "STEREO_TERPON","base":"STEREO_MESH","params":{"uri": "03198702.json"}}'>

</head>

<body>

<div style="width: 50%;">

<dl8-live-video id="remoteVideo" format="STEREO_TERPON" muted="true">

<source>

</dl8-live-video>

</div>

<input class="form-control" type="text" id="streamName" placeholder="Stream Name">

<button id="publishBtn" type="button" class="btn btn-default" disabled>Publish</button>

<button id="unpublishBtn" type="button" class="btn btn-default" disabled>UnPublish</button>

<script>

Flashphoner.init({flashMediaProviderSwfLocation: '../../../../media-provider.swf'});

var SESSION_STATUS = Flashphoner.constants.SESSION_STATUS;

var STREAM_STATUS = Flashphoner.constants.STREAM_STATUS;

var STREAM_STATUS_INFO = Flashphoner.constants.STREAM_STATUS_INFO;

var publishBtn = $('#publishBtn').get(0);

var dl8video = null;

var url = setURL();

document.addEventListener('x-dl8-evt-ready', function () {

dl8video = $('#remoteVideo').get(0);

$('#publishBtn').prop('disabled', false).click(function() {

publishStream();

});

});

var mockLocalDisplay = $('<div></div>');

var mockLocalVideo = $('<video></video>',{id:'mock-LOCAL_CACHED_VIDEO'});

mockLocalDisplay.append(mockLocalVideo);

function publishStream() {

$('#publishBtn').prop('disabled', true);

$('#unpublishBtn').prop('disabled', false);

var video = dl8video.contentElement;

Flashphoner.createSession({urlServer: url}).on(SESSION_STATUS.ESTABLISHED, function (session) {


session.createStream({

name: $('#streamName').val(),

display: mockLocalDisplay.get(0)

}).on(STREAM_STATUS.PUBLISHING, function (stream) {

var srcObject = mockLocalVideo.get(0).srcObject;

video.srcObject = srcObject;

dl8video.start();

mockLocalVideo.get(0).pause();

mockLocalVideo.get(0).srcObject = null;

$('#unpublishBtn').prop('disabled', false).off('click').click(function() {

stream.stop();

$('#publishBtn').prop('disabled', false);

$('#unpublishBtn').prop('disabled', true);

dl8video.exit();

});

}).publish();

})

}

</script>

</body>

</html>

Audio and video tracks activity checking¶

Before build 5.2.533, the presence of media traffic in a stream could be controlled by the following parameter in flashphoner.properties

By default, RTP activity checking is enabled. If no media packets are received from the browser within 60 seconds, the publisher connection is closed and the message Failed by RTP activity is written to the log.

Since build 5.2.533, video and audio RTP activity checking settings are separate

Activity timeout is set by the following parameter

Therefore, if video only streams are published to the server, audio RTP activity checking should be disabled

If audio only streams are published to the server, video RTP activity checking should be disabled

RTP activity can be checked for publishing streams only, not for playing streams.
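To make the effect of the split settings concrete, here is a small JavaScript model of the check as described above: each track type is checked on its own, and the publisher is closed when a checked track has been silent for longer than the timeout. This is an illustration under stated assumptions, not WCS code; checkRtpActivity and its field names are hypothetical.

```javascript
// Illustrative model of per-track RTP activity checking for a publishing
// stream. Names are hypothetical; this is not the WCS implementation.
const DEFAULT_TIMEOUT_MS = 60 * 1000; // 60 seconds, the default described above

// Returns "video" or "audio" if that checked track has been silent longer
// than the timeout (the publisher would be closed), or null otherwise.
function checkRtpActivity({ nowMs, lastAudioMs, lastVideoMs,
                            checkAudio, checkVideo, timeoutMs = DEFAULT_TIMEOUT_MS }) {
  if (checkVideo && nowMs - lastVideoMs > timeoutMs) return "video";
  if (checkAudio && nowMs - lastAudioMs > timeoutMs) return "audio";
  return null;
}

// A video-only publisher: video packets keep arriving, audio never does.
const videoOnly = { nowMs: 120000, lastAudioMs: 0, lastVideoMs: 119500, checkVideo: true };
console.log(checkRtpActivity({ ...videoOnly, checkAudio: true }));  // audio
console.log(checkRtpActivity({ ...videoOnly, checkAudio: false })); // null
```

The model shows why a video-only publisher is closed when audio checking stays enabled, and the same reasoning applies to audio-only publishers and video checking.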

Disable tracks activity checking by stream name¶

Since build 5.2.1784 it is possible to disable video and audio tracks activity checking for the streams with names matching a regular expression

The feature may be useful for streams in which a media traffic can stop for a long time, for example, screen sharing streams from an application window

In this case, tracks activity checking will not be applied to streams named like conference-123-user-456-screen
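The name matching can be pictured with a short JavaScript sketch. The pattern below is a hypothetical example chosen to match screen sharing names like conference-123-user-456-screen; the real expression is whatever is configured on the server. The pattern is anchored with ^ and $ so that the whole stream name has to match, which avoids surprises from partial matches.

```javascript
// Hypothetical pattern: the actual value is whatever is configured on the server.
// Anchored with ^ and $ so the whole stream name must match.
const EXCLUDE_ACTIVITY_CHECK = /^conference-\d+-user-\d+-screen$/;

// Returns true if tracks activity checking would be skipped for this stream.
function isActivityCheckExcluded(streamName, pattern = EXCLUDE_ACTIVITY_CHECK) {
  return pattern.test(streamName);
}

console.log(isActivityCheckExcluded("conference-123-user-456-screen")); // true
console.log(isActivityCheckExcluded("conference-123-user-456"));        // false (camera stream)
```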

If Chrome browser sends empty video due to web camera conflict¶

Some Chrome versions do not return an error if the web camera is busy, but publish a stream with empty video (black screen) instead. In this case, stream publishing can be stopped in two ways: using JavaScript and HTML5 on the client side, or using server settings.

Stopping a stream with empty video on client side¶

The video track that Chrome creates for a busy web camera stops after no more than one second of publishing, and then the stream is sent without a video track. In this case, the video track state (the readyState property) changes to ended, and the corresponding ended event is fired, which the web application can catch with an onended handler. To use this event:

- Add a function that registers the onended event handler to the web application script. The handler stops stream publishing with Stream.stop()

function addVideoTrackEndedListener(localVideo, stream) {
    var videoTrack = extractVideoTrack(localVideo);
    if (videoTrack && videoTrack.readyState === 'ended') {
        // The track has already ended (busy camera): stop publishing right away
        console.error("Video source error. Disconnect...");
        stream.stop();
    } else if (videoTrack) {
        // Stop publishing as soon as the track ends
        videoTrack.onended = function (event) {
            console.error("Video source error. Disconnect...");
            stream.stop();
        };
    }
}

- Add a function to remove the event handler when the stream is stopped

- Add a function to extract the video track

- Register the event handler when publishing a stream

- Remove the event handler when stopping a stream
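The helpers for the remaining steps are not shown here, so the following is a hypothetical sketch of them. It assumes that the display element passed to the stream contains a single video element whose srcObject is the published MediaStream; extractVideoTrack, removeVideoTrackEndedListener and the mock objects are illustrative names, not Web SDK API. The mocks exist only so the snippet runs outside a browser.

```javascript
// Assumption: `localVideo` is the display container holding a <video>
// element whose srcObject is the captured MediaStream.
function extractVideoTrack(localVideo) {
  const video = localVideo && localVideo.querySelector("video");
  const mediaStream = video && video.srcObject;
  if (!mediaStream) return null;
  const tracks = mediaStream.getVideoTracks();
  return tracks.length > 0 ? tracks[0] : null;
}

// Detach the onended handler so a stopped stream is not stopped again.
function removeVideoTrackEndedListener(localVideo) {
  const videoTrack = extractVideoTrack(localVideo);
  if (videoTrack) {
    videoTrack.onended = null;
  }
}

// --- Minimal mocks so the sketch runs without a browser ---
function mockDisplay(track) {
  const stream = { getVideoTracks: () => (track ? [track] : []) };
  return { querySelector: (sel) => (sel === "video" ? { srcObject: stream } : null) };
}

const track = { readyState: "live", onended: () => {} };
const display = mockDisplay(track);
console.log(extractVideoTrack(display) === track); // true
removeVideoTrackEndedListener(display);
console.log(track.onended); // null
```

In a page, addVideoTrackEndedListener(localVideo, stream) would then be called from the STREAM_STATUS.PUBLISHING handler, and removeVideoTrackEndedListener(localVideo) from the handler that runs when the stream is stopped, such as STREAM_STATUS.UNPUBLISHED.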

Videotrack activity checking on server side¶

Video track activity checking for streams published on the server is enabled by default with the following parameter in the flashphoner.properties file

In this case, if there is no video in stream, its publishing will be stopped after 60 seconds.

Video only stream publishing with constraints¶

In some cases, a video-only stream should be published while the microphone is busy, for example, while a voice phone call is in progress. To prevent the browser from requesting microphone access, set the constraints for video-only publishing:

session.createStream({

name: streamName,

display: localVideo,

constraints: {video: true, audio: false},

...

}).publish();

Audio only stream publishing¶

In most cases, it is enough to set the constraints to publish an audio-only stream:

session.createStream({

name: streamName,

display: localVideo,

constraints: {video: false, audio: true},

...

}).publish();

Audio only stream publishing in Safari browser¶

When an audio-only stream is published from iOS Safari with constraints, the browser does not send audio packets. To work around this, the stream should be published with video, and then the video should be muted:

session.createStream({

name: streamName,

display: localVideo,

constraints: {video: true, audio: true},

...

}).on(STREAM_STATUS.PUBLISHING, function (stream) {

stream.muteVideo();

...

}).publish();

In this case, iOS Safari will send empty video packets (blank screen) and audio packets.
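The choice between the two audio-only approaches can be kept in one place with a small helper. This is a hypothetical sketch: audioOnlyPublishOptions is not part of Web SDK, and detecting iOS Safari is left to the application (the isIOSSafari flag is an assumption). The commented lines show how the result could be wired into createStream() and muteVideo().

```javascript
// Hypothetical helper: publish options for an audio-only stream.
// `isIOSSafari` must be determined by the application (not shown here).
function audioOnlyPublishOptions(isIOSSafari) {
  if (isIOSSafari) {
    // iOS Safari sends no audio when video is disabled in constraints,
    // so capture video too and mute it once publishing has started.
    return { constraints: { video: true, audio: true }, muteVideoOnPublish: true };
  }
  return { constraints: { video: false, audio: true }, muteVideoOnPublish: false };
}

// Browser usage with Web SDK (not runnable outside a page):
// var opts = audioOnlyPublishOptions(isIOSSafari);
// session.createStream({name: streamName, display: localVideo, constraints: opts.constraints})
//     .on(STREAM_STATUS.PUBLISHING, function (stream) {
//         if (opts.muteVideoOnPublish) {
//             stream.muteVideo();
//         }
//     }).publish();

console.log(audioOnlyPublishOptions(true).constraints); // { video: true, audio: true }
```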

Disable resolution constraints normalization in Safari browser¶

By default, WebSDK normalizes stream publishing resolution constraints set in Safari browser. In this case, if width or height is not set, or equal to 0, then the picture resolution is forced to 320x240 or 640x480. Since WebSDK build 0.5.28.2753.109, it is possible to disable normalization and pass resolution constraints to the browser as is, for example:

publishStream = session.createStream({

...

disableConstraintsNormalization: true,

constraints: {

video: {

width: {ideal: 1024},

height: {ideal: 768}

},

audio: true

}

}).on(STREAM_STATUS.PUBLISHING, function (publishStream) {

...

});

publishStream.publish();

H264 encoding profiles exclusion¶

Since build 5.2.620, some H264 encoding profiles can be excluded from the remote SDP that the server sends to the browser. The profiles to exclude should be listed in the following parameter

In this case, Main (4d) and High (64) profiles will be excluded, but Baseline (42) still remains:

a=rtpmap:102 H264/90000

a=fmtp:102 level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=42001f

a=rtpmap:125 H264/90000

a=fmtp:125 level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=42e01f

a=rtpmap:127 H264/90000

a=fmtp:127 level-asymmetry-allowed=1;packetization-mode=0;profile-level-id=42001f

a=rtpmap:108 H264/90000

a=fmtp:108 level-asymmetry-allowed=1;packetization-mode=0;profile-level-id=42e01f

This setting may be useful, for example, if a browser encodes B-frames when it uses High profile with hardware acceleration enabled.

By default, no profiles will be excluded from SDP if they are supported by browser
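The effect of the exclusion on the SDP can be illustrated with a small JavaScript function. It is a hypothetical sketch of the idea, not the server's code: it reads profile-level-id from each H264 a=fmtp line, treats its first two hex digits as profile_idc (42 Baseline, 4d Main, 64 High), and removes the matching payload types, together with RTX payloads that reference them, from the m=video line and from their a=rtpmap, a=fmtp and a=rtcp-fb lines.

```javascript
// Illustrative sketch (not the server's code): drop H264 payload types whose
// profile_idc (first two hex digits of profile-level-id) is listed in
// `excluded`, e.g. ["4d", "64"] for Main and High.
function excludeH264Profiles(sdp, excluded) {
  const excludedSet = new Set(excluded.map((p) => p.toLowerCase()));
  const lines = sdp.split(/\r?\n/);

  // 1. Find H264 payload types from a=rtpmap lines.
  const h264 = new Set();
  for (const line of lines) {
    const m = /^a=rtpmap:(\d+) H264\/90000/i.exec(line);
    if (m) h264.add(m[1]);
  }

  // 2. Mark H264 payloads with an excluded profile_idc.
  const drop = new Set();
  for (const line of lines) {
    const m = /^a=fmtp:(\d+) .*profile-level-id=([0-9a-f]{6})/i.exec(line);
    if (m && h264.has(m[1]) && excludedSet.has(m[2].slice(0, 2).toLowerCase())) {
      drop.add(m[1]);
    }
  }

  // 3. Also drop RTX payloads associated with a dropped payload (apt=<pt>).
  for (const line of lines) {
    const m = /^a=fmtp:(\d+) apt=(\d+)/.exec(line);
    if (m && drop.has(m[2])) drop.add(m[1]);
  }

  // 4. Rewrite the m=video payload list and skip attributes of dropped payloads.
  const out = [];
  for (const line of lines) {
    const attr = /^a=(?:rtpmap|fmtp|rtcp-fb):(\d+) /.exec(line);
    if (attr && drop.has(attr[1])) continue;
    if (line.startsWith("m=video ")) {
      const parts = line.split(" "); // m=video <port> <proto> <payload types...>
      const kept = parts.slice(3).filter((pt) => !drop.has(pt));
      out.push(parts.slice(0, 3).concat(kept).join(" "));
    } else {
      out.push(line);
    }
  }
  return out.join("\r\n");
}

const exampleSdp = [
  "m=video 9 UDP/TLS/RTP/SAVPF 102 125",
  "a=rtpmap:102 H264/90000",
  "a=fmtp:102 level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=42001f",
  "a=rtpmap:125 H264/90000",
  "a=fmtp:125 level-asymmetry-allowed=1;packetization-mode=1;profile-level-id=640032",
].join("\r\n");
console.log(excludeH264Profiles(exampleSdp, ["4d", "64"]).split("\r\n")[0]);
// m=video 9 UDP/TLS/RTP/SAVPF 102
```

The example SDP is made up for illustration; a real browser SDP contains many more lines, which the function passes through unchanged.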

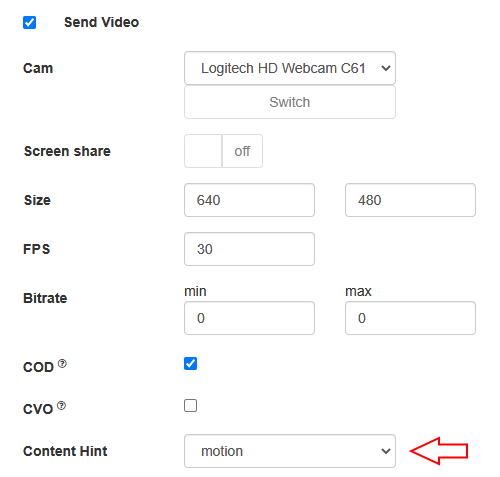

Content type management while publishing from Chromium based browser¶

In some cases, most browsers based on Chromium 91 aggressively assess the publishing channel quality and drop the publishing resolution below the one set in constraints, even if the channel is good enough to publish a 720p or 1080p stream. To work around this behaviour, the videoContentHint option was added in WebSDK build 2.0.180:

session.createStream({

name: streamName,

display: localVideo,

cacheLocalResources: true,

receiveVideo: false,

receiveAudio: false,

videoContentHint: "detail",

...

}).publish();

In WebSDK builds before 2.0.242, this option is set to detail by default and forces browsers to keep the publishing resolution set in constraints. However, in this case the browser can drop FPS when publishing a stream from some USB web cameras. If FPS should be kept regardless of resolution, the option should be set to motion:

session.createStream({

name: streamName,

display: localVideo,

cacheLocalResources: true,

receiveVideo: false,

receiveAudio: false,

videoContentHint: "motion",

...

}).publish();

Since WebSDK build 2.0.242, videoContentHint is set to motion by default. The detail or text values should be set only for screen sharing streaming in browser.

Since WebSDK build 2.0.204 videoContentHint selection is available in Media Device example
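The option name suggests it corresponds to the standard MediaStreamTrack.contentHint property, which accepts "motion", "detail", "text" or an empty string; that correspondence is an assumption here, not a description of Web SDK internals. The sketch below shows how the defaults described above could be applied to a video track with plain WebRTC. pickVideoContentHint and applyVideoContentHint are hypothetical helpers, and the mock stream only lets the snippet run outside a browser.

```javascript
// Hypothetical helper following the guidance above: "motion" for camera
// streams, "detail" (or "text") only for screen sharing.
function pickVideoContentHint({ isScreenSharing = false, textContent = false } = {}) {
  if (!isScreenSharing) return "motion";
  return textContent ? "text" : "detail";
}

// Apply the hint to the first video track of a MediaStream.
// In a browser, `mediaStream` would come from getUserMedia or getDisplayMedia.
function applyVideoContentHint(mediaStream, hint) {
  const [track] = mediaStream.getVideoTracks();
  if (track && "contentHint" in track) {
    track.contentHint = hint;
  }
  return track;
}

// Mock stream so the sketch runs outside a browser.
const mockStream = { getVideoTracks: () => [{ kind: "video", contentHint: "" }] };
const t = applyVideoContentHint(mockStream, pickVideoContentHint());
console.log(t.contentHint); // motion
```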

FPS management in Firefox browser¶

By default, Firefox publishes video with the maximum FPS reported by the web camera driver for the requested resolution. For most modern web cameras this value is 30 FPS. Publishing FPS can be limited more strictly if necessary. To do this, disable constraints normalization:

session.createStream({

...

disableConstraintsNormalization: true,

constraints: {

video: {

width: 640,

height: 360,

frameRate: { max: 15 }

},

audio: true

}

}).on(STREAM_STATUS.PUBLISHING, function (publishStream) {

...

}).publish();

Note that Firefox can exclude the camera from the list while requesting camera and microphone access if the camera driver does not provide the required combination of resolution and FPS. Also, Firefox can change the publishing resolution if the camera driver reports only one resolution with the required FPS.

Stereo audio publishing in browser¶

Audio bitrate should be more than 60000 bps to publish stereo audio with the Opus codec from a browser. This can be done by setting Opus codec parameters on the client side

session.createStream({

name: streamName,

display: localVideo,

constraints: {

audio: {

bitrate: 64000

},

...

}

...

}).publish();

or on server side

In this case, Firefox browser publishes stereo audio without additional setup.

Stereo audio publishing in Chrome based browsers¶

A certain client setup is required to publish stereo audio from Chrome. There are two ways to set this up depending on client implementation

Using Web SDK¶

If Web SDK is used in project, it is necessary to set the following constraint option:

session.createStream({

name: streamName,

display: localVideo,

constraints: {

audio: {

stereo: true

},

...

}

...

}).publish();

Using Websocket API¶

If only the raw Websocket API is used in the project, echo cancellation must be disabled

var constraints = {

audio: {

echoCancellation: false,

googEchoCancellation: false

},

...

};

...

navigator.mediaDevices.getUserMedia(constraints).then(function (stream) {

...

}).catch(reject);

If echo cancellation is enabled, Chrome will publish mono audio even if stereo is set in Opus codec options.
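With the raw Websocket API, the Opus codec options live in the a=fmtp line of the Opus payload in the SDP. The sketch below shows one common way to set them by SDP munging. stereo, sprop-stereo and maxaveragebitrate are standard Opus SDP parameters (RFC 7587); the setOpusStereo helper is hypothetical and not part of Web SDK. Because stereo=1 expresses the receiver's preference, in Chrome such munging is usually applied to the remote description (here, the server's answer) before setRemoteDescription(); treat that as an assumption to verify. The default bitrate is chosen to stay above the 60000 bps needed for stereo.

```javascript
// Hypothetical helper (not part of Web SDK): set Opus stereo parameters
// (RFC 7587: stereo, sprop-stereo, maxaveragebitrate) in an SDP string.
function setOpusStereo(sdp, maxAverageBitrate = 64000) {
  const rtpmap = /^a=rtpmap:(\d+) opus\/48000\/2/im.exec(sdp);
  if (!rtpmap) return sdp; // no Opus payload, nothing to change
  const pt = rtpmap[1];
  const wanted = {
    stereo: "1",
    "sprop-stereo": "1",
    maxaveragebitrate: String(maxAverageBitrate),
  };

  const fmtp = new RegExp("^a=fmtp:" + pt + " (.*)$", "m").exec(sdp);
  if (!fmtp) {
    // No fmtp line for Opus yet: add one right after its rtpmap line.
    const line = "a=fmtp:" + pt + " " +
      Object.entries(wanted).map(([k, v]) => k + "=" + v).join(";");
    return sdp.replace(rtpmap[0], rtpmap[0] + "\r\n" + line);
  }

  // Merge into the existing parameters, overriding the stereo-related ones.
  const params = new Map();
  for (const kv of fmtp[1].split(";")) {
    if (!kv) continue;
    const [key, ...rest] = kv.split("=");
    params.set(key.trim(), rest.join("="));
  }
  for (const [k, v] of Object.entries(wanted)) params.set(k, v);
  const merged = Array.from(params, ([k, v]) => k + "=" + v).join(";");
  return sdp.replace(fmtp[0], "a=fmtp:" + pt + " " + merged);
}

const exampleAnswer =
  "m=audio 9 UDP/TLS/RTP/SAVPF 111\r\n" +
  "a=rtpmap:111 opus/48000/2\r\n" +
  "a=fmtp:111 minptime=10;useinbandfec=1\r\n";
console.log(setOpusStereo(exampleAnswer));
```

Echo cancellation still has to be disabled in the capture constraints; otherwise Chrome sends mono regardless of these parameters.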

How to bypass an encrypted UDP traffic blocking¶

Sometimes encrypted UDP media traffic may be blocked by an ISP. In this case, WebRTC stream publishing over UDP fails with the Failed by RTP activity or Failed by ICE timeout error. To bypass this, it is recommended to use TCP transport on the client side

Another option is to use an external or internal TURN server, or to publish the stream via RTMP or RTSP.

Redundancy support while publishing audio¶

Since build 5.2.1969, redundancy is supported when publishing audio data (RED, RFC 2198). This helps to reduce audio packet loss when the opus codec is used. The feature may be enabled with the following parameter

The red codec should be set before the opus codec. In this case, a browser supporting RED will send redundant data in audio packets. Note that the audio publishing bitrate will increase.

RED should be excluded in cases where it cannot be applied:

codecs_exclude_sip=red,mpeg4-generic,flv,mpv

codecs_exclude_sip_rtmp=red,opus,g729,g722,mpeg4-generic,vp8,mpv

Getting an additional information about publisher configuration¶

Since WebSDK build 2.0.246, shipped with WCS build 5.2.2015 and later, additional information about the publisher configuration is sent to the server. This data may help to debug and solve stream publishing issues.

The data are sent in REST hooks.

System and browser information¶

System and browser information is sent in /connect REST hook

{

"nodeId" : "VuQnlozpitGdVKzoIZg3f2JmJdMldzPV@192.168.0.40",

"appKey" : "defaultApp",

"sessionId" : "/192.168.0.231:11641/192.168.0.40:8443-b7efee23-45ac-4591-9aec-37f896ef10f1",

...,

"clientInfo" : {

"architecture" : "x86",

"bitness" : "64",

"brands" : [ {

"brand" : "Not/A)Brand",

"version" : "8"

}, {

"brand" : "Chromium",

"version" : "126"

}, {

"brand" : "Google Chrome",

"version" : "126"

} ],

"fullVersionList" : [ {

"brand" : "Not/A)Brand",

"version" : "8.0.0.0"

}, {

"brand" : "Chromium",

"version" : "126.0.6478.127"

}, {

"brand" : "Google Chrome",

"version" : "126.0.6478.127"

} ],

"mobile" : false,

"model" : "",

"platform" : "Windows",

"platformVersion" : "10.0.0"

}

}
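A REST hook handler can use these fields, for example, to log which browser and OS a client is using. The function below is a hypothetical sketch working on a parsed /connect hook body like the one above. It skips the placeholder "Not/A)Brand" entry that Chromium adds to its brand lists; the regular expression used for that is a heuristic. Since most fields are filled in only by Chromium based browsers, the function tolerates missing fields.

```javascript
// Hypothetical helper: summarize clientInfo from a parsed /connect REST hook body.
// Heuristic filter for the placeholder brand Chromium adds, e.g. "Not/A)Brand".
const GREASE_BRAND = /^Not.?A.?Brand$/i;

function summarizeClientInfo(hookBody) {
  const info = hookBody && hookBody.clientInfo;
  if (!info) return null; // no client information in this hook
  const list = info.fullVersionList || info.brands || [];
  return {
    platform: [info.platform, info.platformVersion].filter(Boolean).join(" "),
    arch: [info.architecture, info.bitness].filter(Boolean).join("/") || "unknown",
    mobile: Boolean(info.mobile),
    browsers: list
      .filter((b) => !GREASE_BRAND.test(b.brand))
      .map((b) => b.brand + " " + b.version),
  };
}

// Trimmed copy of the clientInfo part of the /connect example above.
const connectBody = {
  appKey: "defaultApp",
  clientInfo: {
    architecture: "x86", bitness: "64", mobile: false, model: "",
    platform: "Windows", platformVersion: "10.0.0",
    fullVersionList: [
      { brand: "Not/A)Brand", version: "8.0.0.0" },
      { brand: "Chromium", version: "126.0.6478.127" },
      { brand: "Google Chrome", version: "126.0.6478.127" },
    ],
  },
};
console.log(summarizeClientInfo(connectBody));
```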

Attention

Architecture, OS version and full browser build number are available only in Chromium based browsers

System and browser information is available at server side in response to /connection/find and /connection/find_all REST queries.

Publisher media devices information¶

Publisher media devices information is sent in /publishStream REST hook

{

"nodeId" : "d2hxbqNPE04vGeZ51NPhDuId6k3hUrBB@192.168.0.39",

"appKey" : "defaultApp",

"sessionId" : "/192.168.0.83:42172/192.168.0.39:8443-325c8258-81a6-43de-8c74-1bf582fb436a",

"mediaSessionId" : "bb237f90-39be-11ef-81b8-bda3bf19742b",

"name" : "test",

"published" : true,

...,

"localMediaInfo" : {

"devices" : {

"video" : [ {

"id" : "bc18c5c2510d338d7b2c26bce4e37967feea3172f54ba2077558775d51839419",

"label" : "HD Webcam C615 (046d:082c)",

"type" : "camera"

}, {

"id" : "1f78289ccdbf27d67d605a35d6288acbdefe257275d4b5403525fb5cb1e1822e",

"label" : "HP HD Camera (0408:5347)",

"type" : "camera"

} ],

"audio" : [ {

"id" : "de988681c02901db0bfe012cd393eb2db5245fc2fb34709a26686ae6ca85b3ba",

"label" : "HD Webcam C615 Mono",

"type" : "mic"

}, {

"id" : "default",

"label" : "Default",

"type" : "mic"

}, {

"id" : "e7f4beddb0d71ea1f618cf3bab0f7e94053b622ddaf312c403824caa006f5889",

"label" : "Quantum 600 Mono",

"type" : "mic"

}, {

"id" : "fc6ac664dec546102d3f83b7fb5981afe3f0e88f8b76ffbe660ecfdd989bcf96",

"label" : "Family 17h (Models 10h-1fh) HD Audio Controller Analog Stereo",

"type" : "mic"

} ]

},

"tracks" : {

"video" : [ {

"trackId" : "1922f1d0-3a3f-4fa5-bd6e-e91ac84c666c",

"device" : "HD Webcam C615 (046d:082c)"

} ],

"audio" : [ {

"trackId" : "44187ea1-b756-4feb-80f0-ac57934c2200",

"device" : "MediaStreamAudioDestinationNode"

} ]

}

}

}

Where:

- devices - a list of media devices available to the browser
- tracks - the devices that the audio and video tracks of the published stream are capturing from

Publisher media devices information is available at server side in response to /stream/find and /stream/find_all REST queries.
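On the server side, these fields can be turned into a short summary for debugging, for example to see which camera a publisher actually used. The helpers below are hypothetical sketches working on a parsed /publishStream hook body such as the one above; the sample is trimmed and the device ids are omitted.

```javascript
// Hypothetical helpers for a parsed /publishStream REST hook body.

// List each published track with the device it captures from.
function describePublisherTracks(hookBody) {
  const media = hookBody && hookBody.localMediaInfo;
  if (!media || !media.tracks) return [];
  const result = [];
  for (const kind of ["video", "audio"]) {
    for (const track of media.tracks[kind] || []) {
      result.push({ kind, trackId: track.trackId, device: track.device });
    }
  }
  return result;
}

// Count the devices available to the browser by type ("camera", "mic", ...).
function countDevicesByType(hookBody) {
  const media = hookBody && hookBody.localMediaInfo;
  const devices = (media && media.devices) || {};
  const counts = {};
  for (const list of Object.values(devices)) {
    for (const device of list) {
      counts[device.type] = (counts[device.type] || 0) + 1;
    }
  }
  return counts;
}

// Trimmed copy of the example above (device ids omitted).
const publishBody = {
  name: "test",
  localMediaInfo: {
    devices: {
      video: [
        { label: "HD Webcam C615 (046d:082c)", type: "camera" },
        { label: "HP HD Camera (0408:5347)", type: "camera" },
      ],
      audio: [
        { label: "HD Webcam C615 Mono", type: "mic" },
        { label: "Default", type: "mic" },
      ],
    },
    tracks: {
      video: [{ trackId: "1922f1d0-3a3f-4fa5-bd6e-e91ac84c666c", device: "HD Webcam C615 (046d:082c)" }],
      audio: [{ trackId: "44187ea1-b756-4feb-80f0-ac57934c2200", device: "MediaStreamAudioDestinationNode" }],
    },
  },
};
for (const t of describePublisherTracks(publishBody)) {
  console.log(t.kind + ": " + t.device);
}
console.log(countDevicesByType(publishBody)); // { camera: 2, mic: 2 }
```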

Known issues¶

1. If the web app is inside an iframe element, publishing of the video stream may fail.¶

Symptoms

IceServer errors in the browser console

Solution

Move the app out of the iframe to a separate page

2. Bitrate problems are possible when publishing a stream under Windows 10 or Windows 8 with hardware acceleration enabled in a browser¶

Symptoms

Low quality of the video, muddy picture, bitrate shown in chrome://webrtc-internals is less than 100 kbps.

Solution

Turn off hardware acceleration in the browser or use the VP8 codec to publish

3. Stream publishing with local video playback in VR Player does not work in legacy MS Edge¶

Symptoms

When stream is published in legacy MS Edge, local video playback does not start in VR Player

Solution

Use another browser to publish a stream or update legacy MS Edge to Chromium Edge

4. In some cases microphone does not work in Chrome browser while publishing WebRTC stream¶

Symptoms

Microphone does not work while publishing a WebRTC stream, including in the example web applications out of the box

Solution

Turn off gain node creation in Chrome browser using WebSDK initialization parameter createMicGainNode: false

Flashphoner.init({

flashMediaProviderSwfLocation: '../../../../media-provider.swf',

createMicGainNode: false

});

Note that microphone gain setting will not work in this case.

5. G722 codec does not work in Edge browser¶

Symptoms

WebRTC stream with G722 audio does not publish in Edge browser

Solution

Use another codec or another browser. If Edge browser must be used, exclude G722 with the following parameter

6. Some Chromium based browsers do not support H264 codec depending on browser and OS version¶

Symptoms

Stream publishing does not work, stream playback works partly (audio only) or does not work at all

Solution

Enable VP8 on server side and exclude H264 for publishing or playing on client side:

publishStream = session.createStream({

...

stripCodecs: "h264,H264"

}).on(STREAM_STATUS.PUBLISHING, function (publishStream) {

...

});

publishStream.publish();

Note that stream transcoding is enabled on server when stream published as H264 is played as VP8 and vice versa.

7. iOS Safari 12.1 does not send video frames when picture with certain resolution is published¶

Symptoms

When an H264 stream is published from iOS Safari 12.1, the subscriber receives audio packets only, and the publisher's WebRTC statistics also show audio frames only

Solution

Enable VP8 on server side and exclude H264 for publishing or playing on client side:

publishStream = session.createStream({

...

stripCodecs: "h264,H264"

}).on(STREAM_STATUS.PUBLISHING, function (publishStream) {

...

});

publishStream.publish();

Note that stream transcoding is enabled on server when stream published as H264 is played as VP8 and vice versa.

8. Stream from built-in camera cannot be published in iOS Safari 12 and MacOS Safari 12 in some resolutions¶

Solution

a) use only resolutions that pass the WebRTC Camera Resolution test

b) use an external camera that supports the required resolutions in MacOS Safari

c) disable resolution constraints normalization and set width and height as ideal, see example above.

9. Non-latin characters in stream name should be encoded¶

Symptoms

Non-latin characters in the stream name are replaced with question marks on the server side

Solution

Use the JavaScript function encodeURIComponent() when publishing the stream
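A minimal example of the encoding step. The sample name is arbitrary, and passing the encoded value as the name option of createStream() follows the advice above; that players would then need to request the same encoded name is an assumption, not something stated here.

```javascript
// Encode a stream name containing non-Latin characters before publishing.
// The name "тест" is just an example.
const streamName = "тест";
const encodedName = encodeURIComponent(streamName);
console.log(encodedName); // %D1%82%D0%B5%D1%81%D1%82

// In a Web SDK page the encoded value would then be used as the stream name:
// session.createStream({name: encodedName, display: localVideo}).publish();
```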

10. In some cases, the server cannot parse an H264 stream encoded with CABAC¶

Symptoms

WebRTC H264 stream publishing does not work

Solution

a) use a lower encoding profile, for example Baseline, which does not use CABAC

b) Enable VP8 on server side and exclude H264 for publishing or playing on client side:

publishStream = session.createStream({

...

stripCodecs: "h264,H264"

}).on(STREAM_STATUS.PUBLISHING, function (publishStream) {

...

});

publishStream.publish();

Note that stream transcoding is enabled on server when stream published as H264 is played as VP8 and vice versa.

11. WebRTC playback may not work in Firefox on MacOS Catalina¶

Symptoms

The system warning "libgmpopenh264.dylib" can't be opened because it is from an unidentified developer is displayed, and the H264 WebRTC stream does not play

Solution

Firefox uses a third-party library that is not signed by an identified developer to work with H264, which is prohibited by macOS Catalina security policies. To add an exception, go to System Preferences - Security & Privacy - General - Allow apps downloaded from - App Store and identified developers, find the message "libgmpopenh264.dylib" was blocked from opening because it is not from an identified developer and click Open Anyway

12. ice_keep_alive_enabled=true parameter is not used in the latest WCS builds¶

Since build 5.2.672, the parameter ice_keep_alive_enabled=true is not used. The ICE keep alive timeout is started automatically if WCS starts sending STUN keep alives first, for example during an incoming SIP call or while pushing a WebRTC stream to another server

13. MacOS Safari 14.0.2 (MacOS 11) does not publish stream from MacBook FaceTimeHD camera with aspect ratio 4:3¶

Symptoms

After stream publishing starts in examples such as Two Way Streaming or Stream Recording, the browser stops sending video packets after 10 seconds, a black screen is shown on the player side, and publishing fails due to the absence of video traffic

Solution

a) Publish stream with aspect ratio 16:9 (for example, 320x180, 640x360 etc)

publishStream = session.createStream({

...

constraints: {

video: {

width: 640,

height: 360

},

audio: true

}

}).on(STREAM_STATUS.PUBLISHING, function (publishStream) {

...

});

publishStream.publish();

b) Update Web SDK to build 0.5.28.2753.153 or newer, where the default resolution for Safari browser is adapted to 16:9

c) Update MacOS to build 11.3.1, Safari to build 14.1 (16611.1.21.161.6)

14. Streams published on Origin server in CDN may not be played from Edge if some H264 encoding profiles are excluded¶

Symptoms

WebRTC H264 stream published on Origin is playing as audio only from Edge with VP8 codec shown in stream metrics

Solution

If some H264 encoding profiles are excluded on Origin

the allowed H264 profiles should be explicitly set on Edge with the following parameter

15. MacOS Safari 14.0.* stops sending video packets after video is muted and then unmuted due to a WebKit bug¶

Symptoms

When muteVideo() then unmuteVideo() are applied, the publishing stops after a minute with Failed by Video RTP activity error

Solution

Update MacOS to build 11.3.1 and Safari to build 14.1 (16611.1.21.161.6). In this build the WebKit bug appears to be fixed, and the problem does not reproduce

16. When publishing from Google Pixel 3/3XL, the picture is strongly distorted in some resolutions¶

Symptoms

The local video is displayed normally, but is played back with strong distortion (transverse stripes)

Solution

Avoid the following resolutions while publishing stream from Google Pixel 3/3XL:

- 160x120

- 1920x1080

17. iOS Safari 15.1 requires the other side to enable the image orientation extension when publishing an H264 stream¶

Symptoms

Webpage crashes in iOS Safari 15.1 when stream publishing is started (Webkit bugs https://bugs.webkit.org/show_bug.cgi?id=232381 and https://bugs.webkit.org/show_bug.cgi?id=231505)