Using RTMP encoder¶

To run online broadcasts, you can use special hardware or software video capture devices (Live Encoders). Such devices or programs capture a video stream and send it to the server via the RTMP protocol.

Web Call Server can receive an RTMP video stream from such a device or program (Wirecast, ffmpeg, OBS Studio, FMLE, etc.) encoded with H.264 + AAC or Sorenson Spark + Speex and broadcast this stream to browsers and mobile devices.

Overview¶

Technical specifications¶

- Receiving incoming audio and video streams via the RTMP protocol

- Broadcasting the received video stream to browsers and platforms: any supported by WCS

- Video stream playback technologies: any supported by WCS

Codec support¶

- Video H.264 + audio AAC

- Video Sorenson Spark + audio Speex 16 kHz

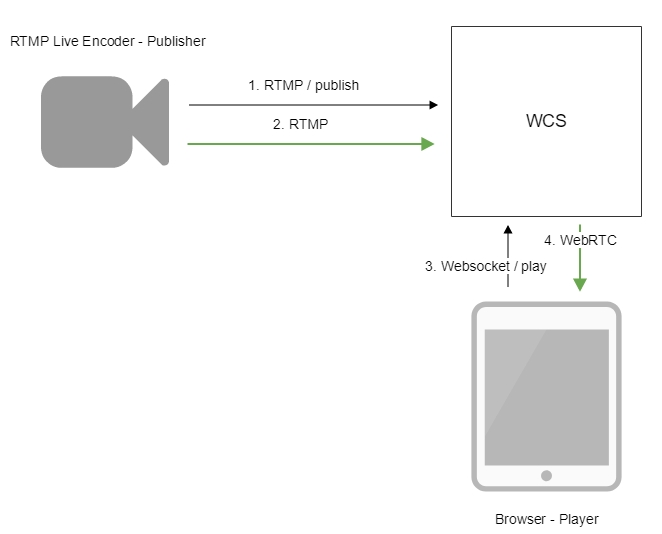

Operation flowchart¶

- Live Encoder establishes a connection to the server via the RTMP protocol and sends the publish command.

- Live Encoder sends the RTMP stream to the server.

- The browser establishes a connection via Websocket and sends the playStream command.

- The browser receives the WebRTC stream and plays that stream on the page.

Quick manual on testing¶

- For test we use:

- WCS server

- OBS Studio

-

Player web application in Chrome browser to stream playback

-

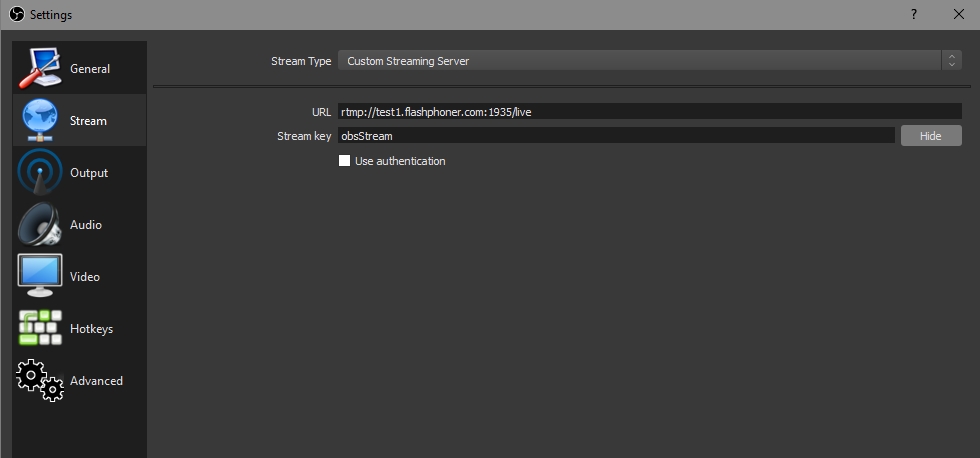

Set up RTMP strteaming to the server address, for example

rtmp://test1.flashphoner.com:1935/live/, set the stream keyobsStream:

-

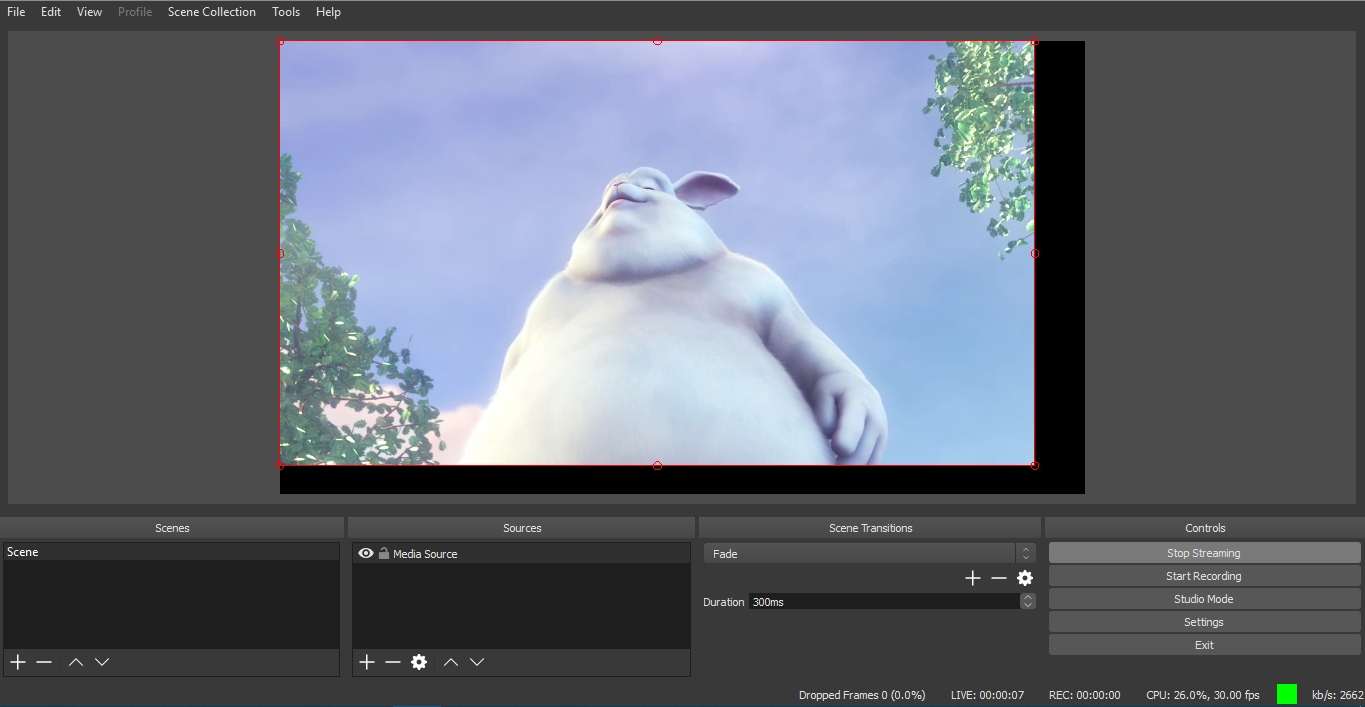

Start streaming in OBS Studio:

-

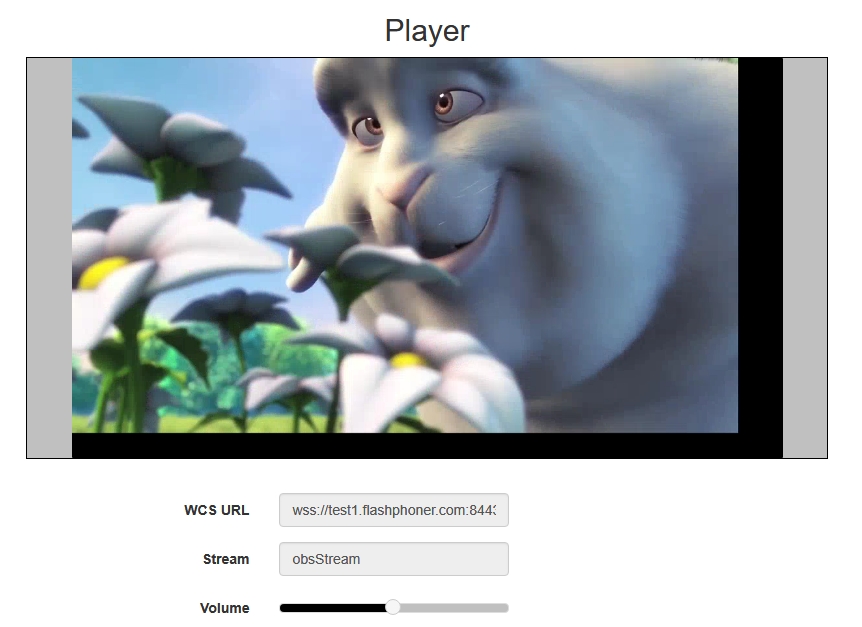

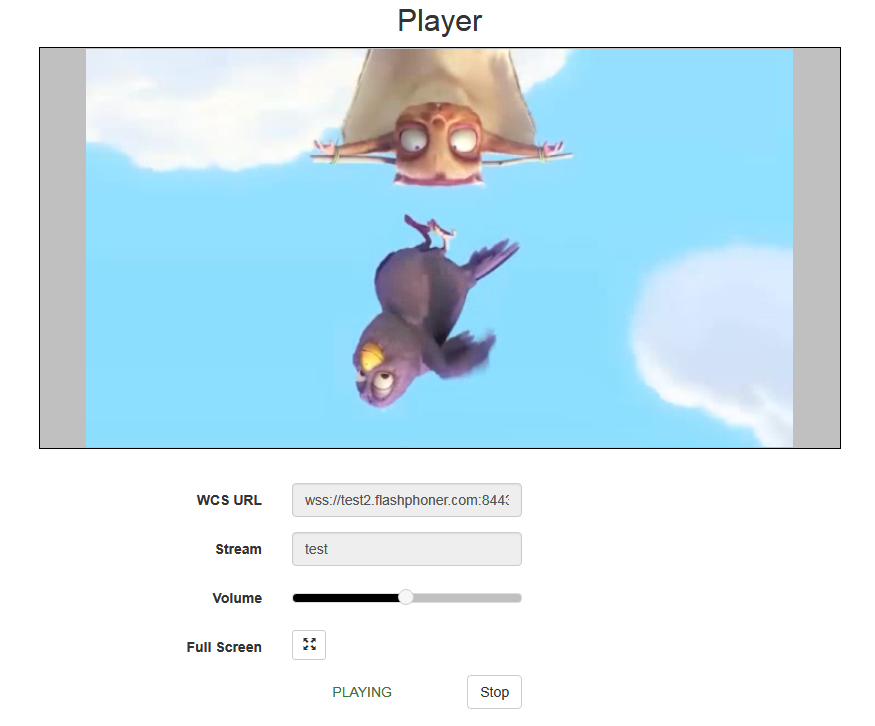

Open Player application. Set the stream key in

Streamfield and clickStartbutton. The stream captured playback begins:

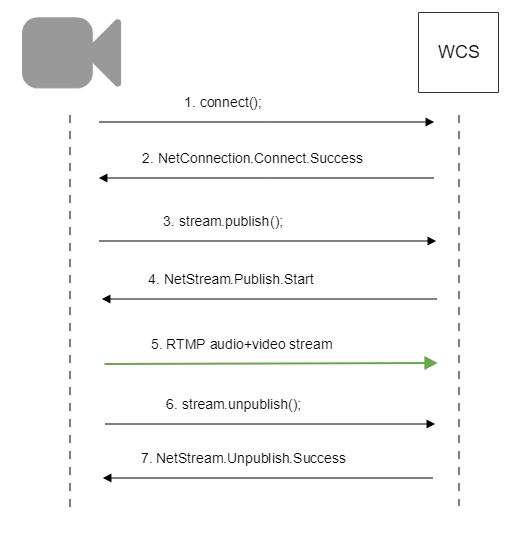

Call flow¶

Below is the call flow for publishing an RTMP stream from an external source (Live Encoder) to the WCS server.

Parsing stream URL parameters¶

When an RTMP stream is published or played on WCS, RTMP connection and stream parameters may be set in the stream URL like this:

rtmp://host:1935/live?connectParam1=val1&connectParam2=val2/streamName?streamParam1=val1&streamParam2=val2

Where

- host is the WCS server hostname;
- connectParam1, connectParam2 are RTMP connection parameters;
- streamName is the stream name on the server;
- streamParam1, streamParam2 are stream parameters.
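To illustrate how the example URL splits into these parts, here is a minimal Python sketch. It is not the WCS parser: the function name parse_rtmp_url is hypothetical, and it assumes parameter values contain no `/` or `?` characters.

```python
from typing import Dict
from urllib.parse import parse_qsl


def parse_rtmp_url(url: str) -> Dict[str, object]:
    """Split rtmp://host:port/app?connectParams/streamName?streamParams.

    Illustrative only: assumes parameter values contain no '/' or '?'.
    """
    scheme, sep, rest = url.partition("://")
    if scheme != "rtmp" or not sep:
        raise ValueError("expected an rtmp:// URL")
    # Host and optional port end at the first '/'
    hostport, _, path = rest.partition("/")
    host, _, port = hostport.partition(":")
    # The stream part follows the last '/', the application part precedes it
    app_part, _, stream_part = path.rpartition("/")
    app, _, connect_query = app_part.partition("?")
    stream_name, _, stream_query = stream_part.partition("?")
    return {
        "host": host,
        "port": int(port) if port else 1935,  # 1935 is the default RTMP port
        "app": app,
        "connect_params": dict(parse_qsl(connect_query)),
        "stream_name": stream_name,
        "stream_params": dict(parse_qsl(stream_query)),
    }


url = ("rtmp://host:1935/live?connectParam1=val1&connectParam2=val2"
       "/streamName?streamParam1=val1&streamParam2=val2")
print(parse_rtmp_url(url))
```

In terms of this sketch, connect_params corresponds to the custom field of the connect REST hook, and stream_params to the custom field of the publishStream hook.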

WCS passes the parameters to the backend server in the custom field of REST hooks, for example:

URL: http://localhost:8081/apps/EchoApp/connect

OBJECT:

    {
        "nodeId" : "Qb3rAjf3lzoy6PEl1WZkUhRG1DsTykgj@192.168.1.1",
        "appKey" : "flashStreamingApp",
        "sessionId" : "/127.0.0.1:5643/192.168.1.1:1935",
        "useWsTunnel" : false,
        "useWsTunnelPacketization2" : false,
        "useBase64BinaryEncoding" : false,
        "keepAlive" : false,
        "custom" : {
            "connectParam1" : "val1",
            "connectParam2" : "val2"
        },
        "login" : "rQq83sodiCPY0pJXCxGO"
    }

URL: http://localhost:8081/apps/EchoApp/publishStream

OBJECT:

    {
        "nodeId" : "Qb3rAjf3lzoy6PEl1WZkUhRG1DsTykgj@192.168.1.1",
        "appKey" : "flashStreamingApp",
        "sessionId" : "/127.0.0.1:5643/192.168.1.1:1935",
        "mediaSessionId" : "627990f9-8fe5-4e92-bb2a-863cc4eb43de",
        "name" : "stream1",
        "published" : true,
        "hasVideo" : false,
        "hasAudio" : true,
        "status" : "NEW",
        "record" : true,
        "width" : 0,
        "height" : 0,
        "bitrate" : 0,
        "minBitrate" : 0,
        "maxBitrate" : 0,
        "quality" : 0,
        "mediaProvider" : "Flash",
        "custom" : {
            "streamParam1" : "val1",
            "streamParam2" : "val2"
        }
    }

This feature can be used, for example, to authenticate a client on the backend server while publishing or playing an RTMP stream on the WCS server.
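A minimal Python sketch of such a check on the backend side. The token connection parameter and the secret value are hypothetical, and the sketch assumes the backend allows a request by answering 200 OK with the received object and rejects it with an error status such as 403. Only the decision logic is shown; serving it at the REST hook URL is left out.

```python
import json
from typing import Tuple

# Hypothetical shared secret expected in the 'token' connection parameter
EXPECTED_TOKEN = "secret123"


def handle_connect(body: str) -> Tuple[int, str]:
    """Decide on a connect REST hook call (sketch).

    The client is assumed to connect with a URL such as
    rtmp://host:1935/live?token=secret123/streamName, so the token
    arrives in the 'custom' field of the connect object.
    Returns (HTTP status, response body).
    """
    request = json.loads(body)
    custom = request.get("custom") or {}
    if custom.get("token") == EXPECTED_TOKEN:
        # Assumed: 200 OK with the received object lets the connection proceed
        return 200, json.dumps(request)
    # Assumed: an error status such as 403 rejects the connection
    return 403, json.dumps({"error": "invalid token"})


ok_status, _ = handle_connect('{"appKey": "flashStreamingApp", "custom": {"token": "secret123"}}')
bad_status, _ = handle_connect('{"appKey": "flashStreamingApp", "custom": {"token": "wrong"}}')
print(ok_status, bad_status)
```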

Passing connection parameters as stream parameters¶

In some cases, it is necessary to pass RTMP connection parameters as stream parameters, for example, an authentication parameter.

This feature is enabled by the following setting:

In this case, the RTMP URL example above will be interpreted as:

Setting a server application when publishing an RTMP stream¶

When publishing an RTMP stream to the WCS server, you can set the server application that will be used for interaction with the backend server. This is done with a parameter in the stream URL:

Where

- host is the WCS server;
- key1 is the application key on the WCS server;
- streamName is the name of the stream to publish.

If the application key parameter is not set, the standard application flashStreamingApp is used by default.

An application can also be specified explicitly as part of the stream URL. To do this, set the following parameter in the flashphoner.properties file:

Then the application key must be set in the stream URL as follows:

In this case, live is also treated as an application name, so when a stream is published with the URL

the live application must be defined on the WCS server.

Sorenson Spark + Speex 16 kHz stream publishing¶

The WCS server can capture an RTMP stream encoded with Sorenson Spark + Speex 16 kHz in an FLV container. Such a stream can be published, for example, using ffmpeg as follows:

ffmpeg -re -i BigBuckBunny.flv -preset ultrafast -ar 16000 -ac 1 -acodec speex -vcodec flv -strict -2 -f flv rtmp://test1.flashphoner.com:1935/live/test

Known limits¶

- To handle such a stream, including stream recording, the stream will be transcoded to H.264 + AAC.

- Payload types 127 for video and 97 for audio should be set in SDP when publishing such a stream, for example:

    v=0
    o=- 1988962254 1988962254 IN IP4 0.0.0.0
    c=IN IP4 0.0.0.0
    t=0 0
    a=sdplang:en
    m=video 0 RTP/AVP 127
    a=rtpmap:127 FLV/90000
    a=sendonly
    m=audio 0 RTP/AVP 97 8 0
    a=rtpmap:97 SPEEX/16000
    a=rtpmap:8 PCMA/8000
    a=rtpmap:0 PCMU/8000
    a=sendonly
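To double-check an SDP before publishing, its rtpmap lines can be inspected. The Python sketch below (helper names are hypothetical, not part of WCS) verifies that payload type 127 maps to FLV/90000 and 97 to SPEEX/16000, using the SDP example above.

```python
from typing import Dict


def rtpmaps(sdp: str) -> Dict[int, str]:
    """Collect 'a=rtpmap:<pt> <encoding>/<clock rate>' lines as {pt: 'ENCODING/clock'}."""
    result: Dict[int, str] = {}
    for line in sdp.splitlines():
        line = line.strip()
        if line.startswith("a=rtpmap:"):
            pt, _, encoding = line[len("a=rtpmap:"):].partition(" ")
            result[int(pt)] = encoding.strip()
    return result


def has_spark_speex_payloads(sdp: str) -> bool:
    """True if PT 127 is FLV/90000 (video) and PT 97 is SPEEX/16000 (audio)."""
    maps = rtpmaps(sdp)
    return maps.get(127) == "FLV/90000" and maps.get(97) == "SPEEX/16000"


SDP = """v=0
o=- 1988962254 1988962254 IN IP4 0.0.0.0
c=IN IP4 0.0.0.0
t=0 0
a=sdplang:en
m=video 0 RTP/AVP 127
a=rtpmap:127 FLV/90000
a=sendonly
m=audio 0 RTP/AVP 97 8 0
a=rtpmap:97 SPEEX/16000
a=rtpmap:8 PCMA/8000
a=rtpmap:0 PCMU/8000
a=sendonly
"""
print(has_spark_speex_payloads(SDP))
```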

RTMP connection activity checking¶

If an RTMP encoder does not support sending Keep Alive packets, or Keep Alives are disabled for another reason with the following parameter

it is necessary to monitor the RTMP connection and close it when no data has been transmitted for a long time. To do this, use the following parameters.

Media traffic checking¶

Since build 5.2.533, RTP activity checking for RTMP streams can be enabled with the following parameter in the flashphoner.properties file:

Read timeout¶

Read timeout is set with the following parameter in the flashphoner.properties file:

In this case, the RTMP connection will be closed if no data has been received in the last 120 seconds.

Write timeout¶

Write timeout is set with the following parameter:

In this case, the RTMP connection will be closed if no data has been sent in the last 120 seconds.

Read and write timeout¶

Read and write timeout is set with the following parameter:

In this case, the RTMP connection will be closed if no data has been either received or sent in the last 120 seconds.
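The three timeout modes differ only in which direction of traffic resets the timer. The Python model below illustrates that difference; the class and field names are hypothetical (they are not the WCS parameters), timestamps are plain seconds, and the connection is assumed to open at t = 0.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class IdleCheck:
    """Model of read, write and read-write timeouts (times in seconds).

    Illustrative names only; these are not WCS parameter names.
    """
    read_timeout: Optional[float] = None
    write_timeout: Optional[float] = None
    read_write_timeout: Optional[float] = None
    last_read: float = 0.0
    last_write: float = 0.0

    def on_read(self, now: float) -> None:
        self.last_read = now

    def on_write(self, now: float) -> None:
        self.last_write = now

    def should_close(self, now: float) -> bool:
        # Read timeout: nothing received for read_timeout seconds
        if self.read_timeout is not None and now - self.last_read >= self.read_timeout:
            return True
        # Write timeout: nothing sent for write_timeout seconds
        if self.write_timeout is not None and now - self.last_write >= self.write_timeout:
            return True
        # Read-write timeout: no traffic in either direction
        if self.read_write_timeout is not None:
            last_activity = max(self.last_read, self.last_write)
            if now - last_activity >= self.read_write_timeout:
                return True
        return False


# A connection opened at t=0 that has only received data so far
conn = IdleCheck(write_timeout=120)
conn.on_read(100)
print(conn.should_close(130))  # True: nothing written since t=0
```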

RTMP stream picture rotation¶

When publishing an RTMP stream to WCS, the stream picture can be rotated using RTMP metadata. This may be useful for handling pictures from mobile publishers.

To rotate a picture by a certain angle, RTMP metadata containing the orientation field should be sent. The following orientations are supported:

| Orientation value | Rotation angle, degrees |
|---|---|
| 0 | 0 |
| 1 | 90 |
| 2 | 180 |
| 3 | 270 |

A picture is rotated clockwise.
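A Python sketch of the angle to orientation mapping from the table above; the helper names are hypothetical. Unlike the Flash Streaming code in this section, it normalizes angles into the 0 to 359 range before the lookup, so -90 is treated as 270.

```python
# Orientation values from the table above, keyed by clockwise angle in degrees
ORIENTATION_BY_ANGLE = {0: 0, 90: 1, 180: 2, 270: 3}


def orientation_for(angle_deg: int) -> int:
    """Return the 'orientation' metadata value for a clockwise rotation.

    Angles are normalized into [0, 360) first (e.g. -90 -> 270); any other
    angle falls back to 0, like the default branch of the Flash example.
    """
    return ORIENTATION_BY_ANGLE.get(angle_deg % 360, 0)


def rotation_metadata(angle_deg: int) -> dict:
    """Build the onMetaData object carrying only the orientation field."""
    return {"orientation": orientation_for(angle_deg)}


print(rotation_metadata(180))  # {'orientation': 2}
```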

Set up¶

To enable stream rotation, set the following parameter in the flashphoner.properties file:

Attention

Stream rotation works for transcoded streams only.

Testing¶

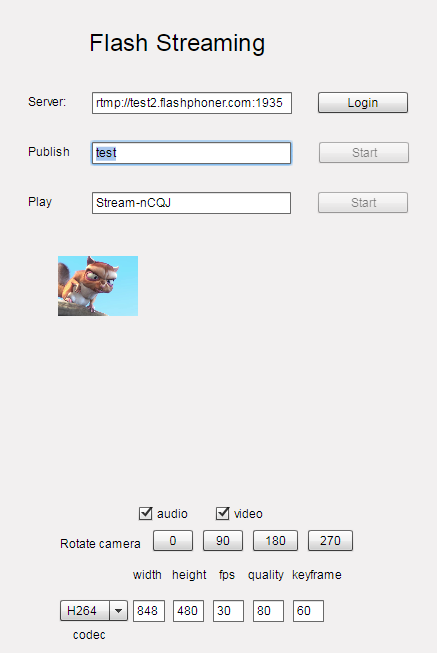

- For test we use:

- WCS server with picture rotation enabled

- Flash Streaming application to publish and rotate the stream

-

Player application to play the stream

-

Open Flash Streaming application. Set stream name

testand desired stream publishing parameters:

-

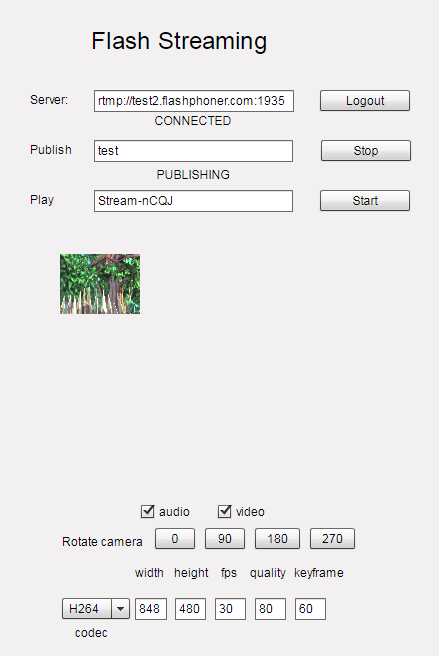

Click

LoginthenStart. Stream publishing begins

-

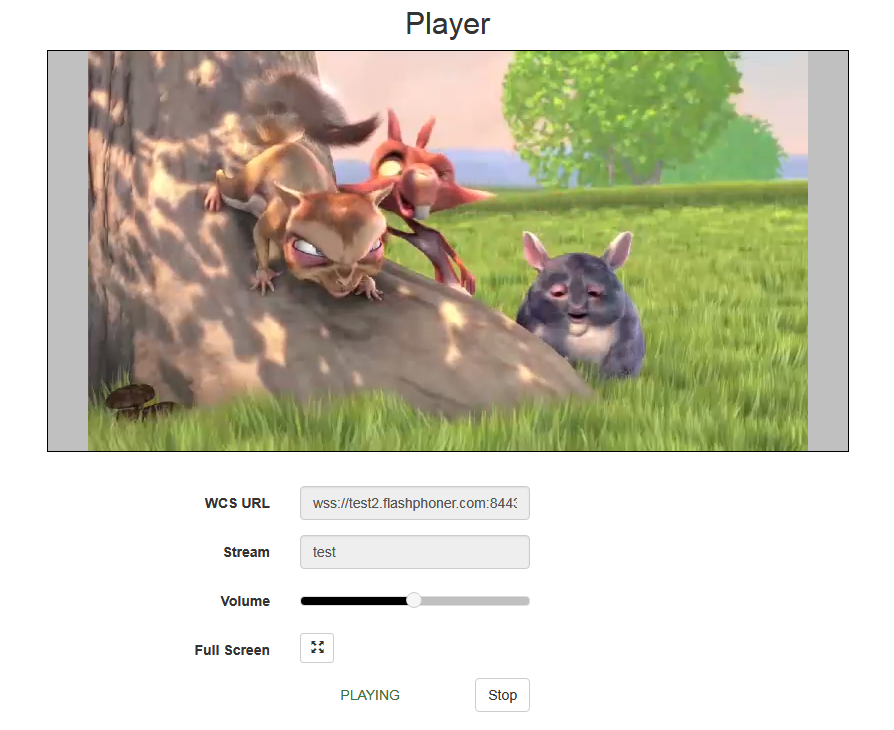

Open Player application in anoter tab or browser, play the stream named

test

-

In Flash Streaming application click

180button inRotate camerasection. The stream rotated to 180 degrees clockwise will be displayed in Player application

To developer¶

Sending stream orientation metadata is implemented in the Flash Streaming application as follows:

    private function rotate(degree:Number):void {
        // Map the clockwise angle to the orientation value expected by WCS
        var metaDataObj:Object = new Object();
        switch(degree) {
            case 0:
                Logger.info("rotate camera to 0");
                metaDataObj.orientation = 0;
                break;
            case 90:
                Logger.info("rotate camera to 90");
                metaDataObj.orientation = 1;
                break;
            case 180:
                Logger.info("rotate camera to 180");
                metaDataObj.orientation = 2;
                break;
            case 270:
                Logger.info("rotate camera to 270");
                metaDataObj.orientation = 3;
                break;
            default:
                metaDataObj.orientation = 0;
                break;
        }
        sendMetaData(metaDataObj);
    }

    private function sendMetaData(data:Object):void {
        // Send the object as onMetaData via @setDataFrame on the publishing stream
        if (publishStream != null) {
            publishStream.send("@setDataFrame", "onMetaData", data);
        }
    }

Note that the orientation value should be sent, not the angle itself.

How to rotate a stream published from ffmpeg¶

The ffmpeg RTMP encoder allows sending orientation metadata to the WCS server using command line switches:

ffmpeg -i input.mp4 -metadata:s:v rotate=90 -vcodec copy -acodec copy -strict -2 -f flv rtmp://test1.flashphoner.com:1935/live/stream_ffmpeg

Note that ffmpeg sends the orientation value, not the angle itself.

Decoding buffer size management¶

When an RTMP stream is published from a software encoder supporting hardware acceleration on an NVIDIA GPU and contains B-frames, the stream picture may twitch in some RTMP or HLS players. To work around this, a parameter was added in build 5.2.863 to set the maximum decoding buffer size passed in SPS:

By default, the buffer size is not limited. This eliminates picture twitching but can increase latency due to excessive buffering. In this case, the buffer size can be limited to 2 frames (the default value before build 5.2.863)

or more frames to eliminate twitching without visible latency.

Incoming RTMP stream buffering¶

An RTMP stream published with high resolution and bitrate may play unsmoothly via WebRTC, with freezes or low FPS, if the publisher's channel is unstable. The incoming stream should be buffered to prevent such playback issues.

The adaptive RTMP incoming buffer has the following tunable parameters:

| Parameter | Description | Default value |
|---|---|---|
| rtmp_in_buffer_start_size | Minimum buffer volume to start, ms | 300 |
| rtmp_in_buffer_initial_size | Maximum buffer volume, ms | 2000 |
| rtmp_in_buffer_max_bufferings_allowed | Maximum number of bufferings allowed | -1 (unlimited) |
| rtmp_in_buffer_polling_time | Buffer polling period, ms | 100 |
| rtmp_in_buffer_overflow_allowed_deviation | Maximum difference between minimum and maximum buffer volumes used, ms | 1000 |
| rtmp_in_buffer_overflow_deviation_window | Window size to collect the difference, ms | 30000 |
| rtmp_in_buffer_overflow_rate | Maximum buffer overflow rate | 0.15 |
| rtmp_in_buffer_clear_threshold | Clear all data exceeding the maximum buffer size when the buffer reaches the threshold, ms | 30000 |

Stop stream buffering if stream delay grows¶

RTMP stream timestamps may differ significantly from server time, and the stream may lag behind if system performance is insufficient for a software stream encoder, or if channel bandwidth decreases. Buffering such a stream causes periodic freezes during playback. Since build 5.2.1311, the following parameter has been added to disable buffering and pass incoming traffic directly to the server engine:

By default, RTMP traffic is always buffered if buffering is enabled. Buffering can be disabled when the stream delay reaches a value defined in milliseconds:

In this case, the buffer is cleared and switches to the PASSTHROUGH state. Even if the stream delay decreases later, the buffer remains in this state, and the stream is not buffered until publishing is stopped.
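The behaviour described above amounts to a one-way state switch. The Python sketch below models it under stated assumptions: the class and method names are hypothetical, the delay is assumed to be the growth of the lag between the server clock and stream timestamps since the first packet (the exact WCS calculation is not described here), and buffer contents and their release are not modelled.

```python
from enum import Enum
from typing import Optional, Tuple


class BufferState(Enum):
    BUFFERING = "BUFFERING"
    PASSTHROUGH = "PASSTHROUGH"


class DelayGuard:
    """Sticky switch from buffering to PASSTHROUGH (sketch).

    Assumption: the stream delay is how far the stream timeline lags behind the
    server clock since the first packet. max_delay_ms=None models the default,
    where traffic is always buffered.
    """

    def __init__(self, max_delay_ms: Optional[int] = None) -> None:
        self.max_delay_ms = max_delay_ms
        self.state = BufferState.BUFFERING
        self._origin: Optional[Tuple[int, int]] = None  # (server ms, stream ms)

    def on_packet(self, server_ms: int, stream_ts_ms: int) -> BufferState:
        if self._origin is None:
            self._origin = (server_ms, stream_ts_ms)
        delay = (server_ms - self._origin[0]) - (stream_ts_ms - self._origin[1])
        if (self.state is BufferState.BUFFERING
                and self.max_delay_ms is not None
                and delay >= self.max_delay_ms):
            # The buffer would be cleared here; once switched, it never goes back
            self.state = BufferState.PASSTHROUGH
        return self.state


guard = DelayGuard(max_delay_ms=2000)
for server_ms, stream_ms in [(0, 0), (1000, 900), (5000, 2500), (6000, 6000)]:
    print(server_ms, guard.on_packet(server_ms, stream_ms).value)
```

The last packet shows the sticky behaviour: the delay has dropped back to zero, but the state stays PASSTHROUGH.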

Recommended RTMP stream buffering settings for high quality publishing¶

The following buffering settings are recommended when publishing high quality RTMP streams (high bitrate, HD or Full HD resolution):

    rtmp_in_buffer_enabled=true
    rtmp_in_buffer_start_size=1000
    rtmp_in_buffer_initial_size=3000
    rtmp_in_buffer_polling_time=30
    rtmp_in_buffer_max_bufferings_allowed=-1

With these settings, the published RTMP stream should play smoothly both for viewers (if channel quality is good enough) and in a mixer.
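To see how the recommended values combine with the defaults from the buffer parameters table, here is a small Python sketch that reads properties text and overlays numeric rtmp_in_buffer_* values on those defaults. The function name is hypothetical, and the check that the start size does not exceed the maximum buffer volume is this sketch's own assumption rather than a documented WCS rule.

```python
from typing import Dict, Union

Number = Union[int, float]

# Default values from the buffer parameters table above
DEFAULTS: Dict[str, Number] = {
    "rtmp_in_buffer_start_size": 300,
    "rtmp_in_buffer_initial_size": 2000,
    "rtmp_in_buffer_max_bufferings_allowed": -1,
    "rtmp_in_buffer_polling_time": 100,
    "rtmp_in_buffer_overflow_allowed_deviation": 1000,
    "rtmp_in_buffer_overflow_deviation_window": 30000,
    "rtmp_in_buffer_overflow_rate": 0.15,
    "rtmp_in_buffer_clear_threshold": 30000,
}


def load_buffer_settings(text: str) -> Dict[str, Number]:
    """Overlay numeric rtmp_in_buffer_* values from properties text on the defaults.

    Other keys (such as rtmp_in_buffer_enabled) are ignored by this sketch.
    """
    settings = dict(DEFAULTS)
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        if key in DEFAULTS:
            # Keep the numeric type of the default (int or float)
            settings[key] = type(DEFAULTS[key])(value.strip())
    # Sanity check assumed by this sketch: start volume must fit in the maximum
    if settings["rtmp_in_buffer_start_size"] > settings["rtmp_in_buffer_initial_size"]:
        raise ValueError("start size exceeds the maximum buffer volume")
    return settings


RECOMMENDED = """
rtmp_in_buffer_enabled=true
rtmp_in_buffer_start_size=1000
rtmp_in_buffer_initial_size=3000
rtmp_in_buffer_polling_time=30
rtmp_in_buffer_max_bufferings_allowed=-1
"""
print(load_buffer_settings(RECOMMENDED))
```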

Detect stream parameters by metadata or media traffic¶

By default, the possible RTMP publishing parameters are set according to the SDP settings file. Since build 5.2.1862, the following option has been added to enable automatic detection of stream publishing parameters from stream metadata or media traffic:

The option is enabled by default. In this case, WCS changes the SDP according to the stream metadata received, or according to media information from the received media packets.

Since build 5.2.1935, it is possible to set up the maximum time to detect stream metadata from received media packets:

The default timeout is 1000 ms.
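For context on detection from media traffic: in RTMP, each audio and video message payload starts with the FLV tag header byte that identifies the codec. The Python sketch below reads that byte according to the FLV file format specification; the helper names are hypothetical, and it only shows where the information lives, not how WCS implements detection.

```python
# Codec identifiers from the FLV file format specification (VIDEODATA / AUDIODATA)
VIDEO_CODEC_IDS = {2: "Sorenson H.263 (Spark)", 7: "H.264 (AVC)"}
AUDIO_SOUND_FORMATS = {2: "MP3", 10: "AAC", 11: "Speex"}


def video_codec(payload: bytes) -> str:
    """Low 4 bits of the first video byte hold CodecID (high 4 bits: FrameType)."""
    codec_id = payload[0] & 0x0F
    return VIDEO_CODEC_IDS.get(codec_id, f"other ({codec_id})")


def audio_codec(payload: bytes) -> str:
    """High 4 bits of the first audio byte hold SoundFormat."""
    sound_format = payload[0] >> 4
    return AUDIO_SOUND_FORMATS.get(sound_format, f"other ({sound_format})")


print(video_codec(bytes([0x17, 0x00])))  # keyframe, AVC sequence header
print(audio_codec(bytes([0xAF, 0x00])))  # AAC, 44 kHz, 16-bit, stereo
```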

Known issues¶

1. A stream containing B-frames does not play or plays with artifacts (latencies, lags)¶

Symptoms

- a stream sent by the RTMP encoder does not play or plays with latencies or lags

- warnings in the client log:

Solution

- change the encoder settings so that B-frames are not used (use a lower encoding profile, disable them in the command line, etc.)

- transcode the stream; the transcoded stream will contain no B-frames
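For example, with ffmpeg the stream could be re-encoded with B-frames disabled. The Python sketch below only assembles such a command; the helper name and file names are hypothetical, and ffmpeg is assumed to be built with libx264. The -profile:v baseline option excludes B-frames in libx264, and -bf 0 sets the maximum number of B-frames to zero explicitly.

```python
import shlex
from typing import List


def publish_without_bframes(src: str, rtmp_url: str) -> List[str]:
    """Build an ffmpeg command that re-encodes video to H.264 without B-frames.

    Assumes ffmpeg is built with libx264. The baseline profile already excludes
    B-frames; -bf 0 states it explicitly. Audio is encoded to AAC.
    """
    return [
        "ffmpeg", "-re", "-i", src,
        "-c:v", "libx264", "-profile:v", "baseline", "-bf", "0",
        "-c:a", "aac",
        "-f", "flv", rtmp_url,
    ]


cmd = publish_without_bframes("input.mp4", "rtmp://test1.flashphoner.com:1935/live/test")
print(shlex.join(cmd))  # pass cmd to subprocess.run() to actually publish
```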

2. AAC frames of type 0 are not supported by the decoder and are ignored during pulled stream playback¶

Symptoms

There are warnings in the client log:

3. When an H264 + AAC stream is published and then played and recorded, video may be out of sync with sound, or there may be no sound at all¶

Symptoms

When an H264 + AAC stream published on the server is played or recorded, sound is out of sync with video or absent.

Solution

a) set the following parameter in the flashphoner.properties file:

This parameter also turns off AAC frame dropping.

b) use the Fraunhofer AAC codec

4. Sound may be distorted or absent when resampled to 11025 Hz¶

Symptoms

When an H264 + AAC stream published on the WCS server is played with an AAC sample rate of 11025 Hz, sound is distorted or absent.

Solution

Do not use the 11025 Hz sample rate, and avoid resampling AAC sound to this rate; for example, do not set this sample rate in SDP settings.

5. Some RTMP functions are not supported and will be ignored¶

- FCSubscribe
- FCPublish
- FCUnpublish
- onStatus
- onUpstreamBase
- releaseStream

6. Some RTMP encoders do not support Keep Alive¶

Symptoms

Disconnections occur frequently while publishing a stream with an RTMP encoder.

Solution

Switch Keep Alive off for RTMP on the server using the following parameter in the flashphoner.properties file:

7. When a stream published with an RTMP encoder is played as HLS, freezes may occur if the GOP is not a multiple of the published file FPS¶

Symptoms

Freezes occur when an RTMP stream is played as HLS.

Solution

In the RTMP encoder settings, set the GOP to a value equal to, or a multiple of, the FPS of the published file. For example, when publishing a file with 25 FPS, set the GOP to 50.
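The rule is simple arithmetic: a GOP covering a whole number of seconds is automatically a multiple of the FPS. A Python sketch with hypothetical helper names; with ffmpeg, the resulting value would be passed with the -g option.

```python
def gop_for(fps: int, seconds: int = 2) -> int:
    """GOP length in frames spanning a whole number of seconds, hence a multiple of FPS."""
    if fps <= 0 or seconds <= 0:
        raise ValueError("fps and seconds must be positive")
    return fps * seconds


def gop_is_multiple_of_fps(gop: int, fps: int) -> bool:
    """Check the rule above: GOP equal to FPS or a multiple of it."""
    return gop > 0 and fps > 0 and gop % fps == 0


print(gop_for(25))  # 50, as in the example above
```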

8. When a stream published with an RTMP encoder is played as WebRTC, the stream audio has low quality¶

Symptoms

Sound is good enough for speech but not for music when an RTMP stream is played as WebRTC.

Solution

Set the Opus encoding bitrate to match the published audio bitrate, for example

if the RTMP stream is published with an audio bitrate of 160 kbps.

9. High CPU load when an H264 + Speex stream is published (for example, using a Flash client) with audio transcoding¶

Symptoms

High CPU load while transcoding audio from Speex to AAC or Opus

10. A stream with unsupported codecs cannot be published¶

Symptoms

An RTMP stream with MP3 or AC3 audio is not published, and the following warnings appear in the server log:

Solution

Re-encode the stream to supported codecs using appropriate encoder settings.